As data grows in importance, tools for data extraction, manipulation, and analysis are becoming more relevant. One notable example is AutoGPT, an open-source application that uses OpenAI’s GPT models to carry out multi-step tasks with little manual intervention. This guide covers what AutoGPT is, how to set it up, how to prepare data for it, how to apply it to data processing, and advanced techniques for optimizing its use. Whether you’re an enthusiast seeking a ground-level understanding or a professional looking for optimization techniques, this guide aims to help you get more out of AutoGPT.

Understanding AutoGPT

Understanding AutoGPT: An Overview

AutoGPT is an open-source application built on machine learning methods. It relies on the Generative Pre-trained Transformer (GPT), a family of transformer-based language models developed by OpenAI, although AutoGPT itself is a separate community project rather than an OpenAI product. The underlying goal is to generate meaningful text output that approaches human quality. The GPT models behind AutoGPT learn patterns and sequences from the data they are trained on, which is what makes the tool useful for data processing.

How AutoGPT Works

AutoGPT works by drawing on a language model that was trained on vast amounts of data. Given specific parameters or an initial input, it identifies related threads, branching possibilities, and likely results based on that training. It then follows these paths to generate meaningful, human-like text output.

AutoGPT in Data Processing

In data processing, AutoGPT serves to both enhance and accelerate work. It’s particularly useful for processing and interpreting high-volume, complex data sets. AutoGPT can work through large data lakes and surface patterns and connections much more quickly than a human can. Through its language prediction models, it can also transform raw, unstructured data into a clear, comprehensible format.

Advantages of Using AutoGPT for Data Processing

One of the main advantages of using AutoGPT for data processing is its efficiency. Given its ability to process data at a much faster rate compared to manual processing, it significantly cuts down on the time taken to analyze, interpret, and utilize data. This improved speed of execution gives businesses a competitive edge by allowing them to provide quicker responses to changes in trends or market dynamics.

Another advantage is consistency. By automating repetitive steps, AutoGPT reduces the human errors that creep into manual data processing. Its outputs can still contain mistakes, so they should be reviewed before they feed into decision-making.

Optimizing AutoGPT Usage in Data Processing

To optimize the usage of AutoGPT in data processing, it is important to first properly understand the format and characteristics of the data to be processed. This understanding affects the use of the relevant model architecture and the training method to be applied to achieve the best results.

When feeding data into AutoGPT, it is vital to ensure the data is clean and relevant. Inaccurate or unnecessary data will only hinder the tool’s effectiveness and might lead to less accurate results.

Adequate training of the AutoGPT models is also key. The larger and more diverse the dataset a model is trained on, the more effectively it can predict future outcomes. Regular updates and retraining with fresh data are also needed because data changes over time.

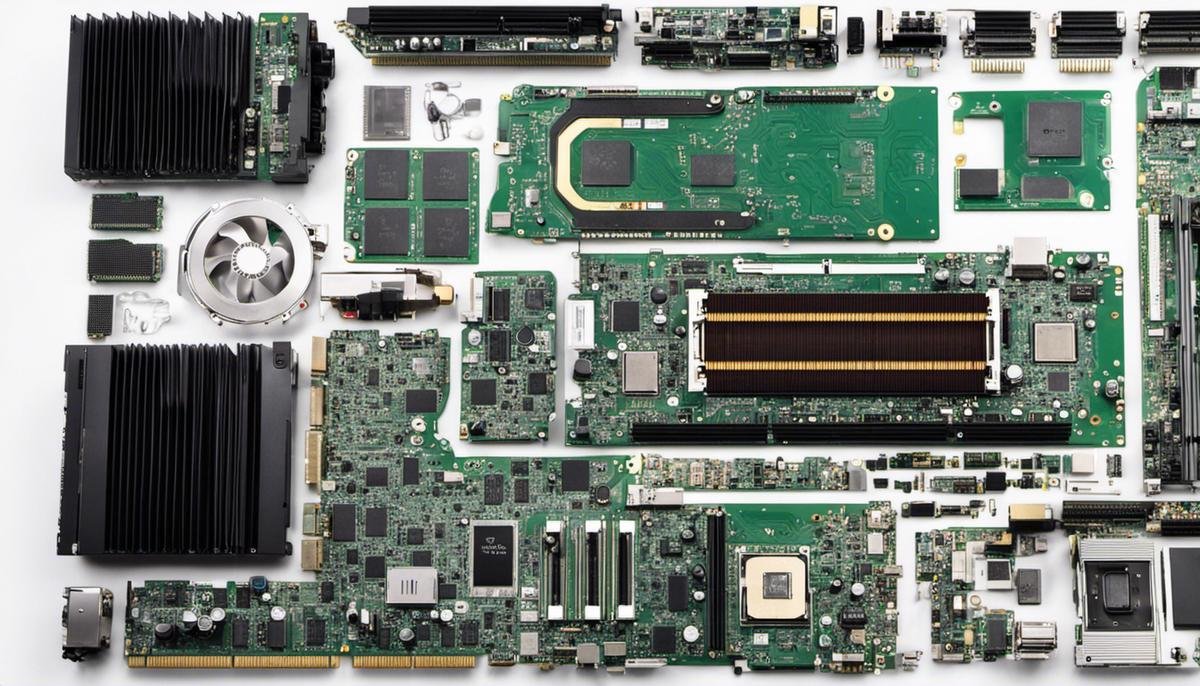

Finally, utilizing the right computational resources like GPU power and sufficient memory is crucial to the smooth operation of AutoGPT. With these resources, AutoGPT can efficiently handle the vast amount of data and computations involved in the processing.

Concluding Thoughts

With its superior efficiency and accuracy, AutoGPT has carved a remarkable space for itself in data processing. By optimizing its usage, it is possible to achieve a higher level of data processing, thereby facilitating a more comprehensive decision-making process.

Setting up AutoGPT

Setting the Stage: Pre-Installation Requirements for AutoGPT

AutoGPT is built on OpenAI’s models but is a separate open-source project, and it needs a capable computing environment to perform well. A system with at least 16GB of RAM and a multi-core CPU is a reasonable baseline; a high-performance GPU helps mainly with resource-intensive tasks that run locally, since the GPT models themselves are typically accessed over OpenAI’s API. On the software side, you need a version of Python supported by the AutoGPT release you plan to use (check the project’s documentation for the current minimum), along with the pip package manager for installing the necessary packages.

Installation Procedure for AutoGPT

To install AutoGPT, first make sure a stable version of Python is installed, along with pip, the package manager for Python, if it’s not already on your system. The officially documented route to AutoGPT is through the project’s GitHub repository, and it does not depend on OpenAI Gym, which is a separate reinforcement learning toolkit. Download or clone the repository, then follow the setup instructions in its README to install the required dependencies and supply your OpenAI API key.

Configuring AutoGPT for Optimal Performance

After successfully installing AutoGPT, careful tuning of its settings is necessary to ensure optimal performance. First, allocate adequate computational resources. Depending on the model size and your specific task, you should allocate enough system memory and GPU resources to ensure smooth running. Second, adjust the batch size based on your computational resources. Larger models and tasks requiring larger inputs will need smaller batch sizes, whereas smaller models and tasks with smaller inputs can handle larger batch sizes. Next, choose the appropriate learning rate. A rate that’s too high might miss the optimal solution, while a rate that’s too low can slow down the learning process. Use a validation set to fine-tune your learning rate.
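
The learning-rate advice above can be illustrated with a toy sketch. This is not AutoGPT code: it assumes a one-parameter linear model trained by plain gradient descent, and the function names (`train_linear`, `pick_learning_rate`) and candidate rates are made up for illustration. It shows only the general idea of comparing rates on a held-out validation set.

```python
def train_linear(xs, ys, learning_rate, steps=50):
    """Fit y = w * x with plain gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


def mse(w, xs, ys):
    """Mean squared error of the model y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def pick_learning_rate(train, valid, candidates):
    """Train once per candidate rate and keep the one with the lowest validation error."""
    scores = {}
    for lr in candidates:
        w = train_linear(*train, learning_rate=lr)
        scores[lr] = mse(w, *valid)
    best = min(scores, key=scores.get)
    return best, scores


# Toy data following y = 2x; the validation points are held out from training.
train = ([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])
valid = ([5.0, 6.0], [10.0, 12.0])

best, scores = pick_learning_rate(train, valid, [0.0001, 0.01, 0.2])
print("best learning rate:", best)
```

In this toy setup the smallest rate barely moves the weight in 50 steps, the largest overshoots and diverges, and the middle one converges, which mirrors the trade-off described above.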

Resolving Compatibility and Troubleshooting Issues

If you encounter any compatibility issues or errors while using AutoGPT, the first step is to ensure that your Python version and installed dependencies are compatible with the AutoGPT version you are running. If compatibility doesn’t seem to be the issue, consult the documentation or community forums for troubleshooting tips. If the error is due to a lack of computational resources, you may have to upgrade your hardware or move your operations to a cloud-based platform for better scalability. Lastly, if your model isn’t performing as expected, it might be due to suboptimal settings. In this case, revisit the configuration stage and fine-tune the parameters.
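
A first compatibility check can be scripted with the standard library alone. The sketch below is an assumption-laden example: the minimum version in `MIN_PYTHON` is a placeholder rather than AutoGPT’s actual requirement, and the helper names are hypothetical. It uses `sys.version_info` and `importlib.metadata`, both part of standard Python.

```python
import sys
from importlib import metadata

# Hypothetical minimum version; take the real value from the release notes.
MIN_PYTHON = (3, 8)


def python_is_supported(minimum=MIN_PYTHON, current=None):
    """Return True if the running (or given) Python version meets the minimum."""
    current = tuple(current) if current is not None else tuple(sys.version_info[:2])
    return current >= tuple(minimum)


def installed_version(package_name):
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


print("Python OK:", python_is_supported())
print("pip version:", installed_version("pip"))
```

Comparing the reported versions against the release notes is usually quicker than reading a long stack trace.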

Running AutoGPT For Data Processing

For data processing tasks, you can use your installed and configured AutoGPT system. Begin by creating a script to load your data into the system. AutoGPT works best with a large amount of high-quality training data. Use AutoGPT’s tokenizers to format the data in a way the model can understand. Depending on the nature of your data and your specific task, you might need to experiment with different formatting techniques. Next, train your model on your data, using the learning rate and batch size previously determined during the configuration phase. After training, examine and interpret the output of your model to determine whether it was successful.

Optimizing AutoGPT: Regular Updates and Upgrades

AutoGPT, like any software, receives regular updates that improve performance, add new features, or fix existing bugs. Updating to the latest release, following the upgrade steps in the project’s documentation, ensures that you’re working with the latest version. If you manage a package with pip, note that upgrading an existing installation requires the ‘--upgrade’ flag; a plain ‘pip install’ leaves an already installed version in place. Be sure to review the release notes for any potential compatibility issues to avoid any disruption to your data processing routines.

Data Preparation for AutoGPT

Unveiling the Importance of Data Preparation for Effective AutoGPT Utilization

AutoGPT, which has emerged as a prominent tool for automatic text generation, relies heavily upon data. The higher the data quality, the more effectively this language modeling engine operates. Simply feeding random or unstructured data into AutoGPT will not produce good results. This is where data preparation comes in: it is all about structuring and organizing your data so that AutoGPT can make the best use of it. This first step lays the groundwork for a smooth and accurate downstream data processing phase.

Fundamental Protocols for Streamlining Data for AutoGPT

Effective data pre-processing, incorporating aspects like sanitization, formatting, and importing, is critical before deploying AutoGPT.

Data Sanitization

Data sanitization primarily involves eliminating or correcting any flawed, corrupt, poorly formatted, redundant, or irrelevant chunks of data from your datasets. By doing this, the probability of errors, inconsistencies, or biases affecting downstream processes or result quality is significantly reduced.
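
As a minimal sketch of what sanitization can look like for text records, the function below drops empty entries, normalizes whitespace, and removes duplicates. It is plain Python, not part of AutoGPT, and `sanitize_records` is a hypothetical helper name; real datasets will usually need rules specific to their content.

```python
import re


def sanitize_records(records):
    """Return cleaned, de-duplicated text records in their original order."""
    seen = set()
    cleaned = []
    for raw in records:
        if raw is None:
            continue  # skip missing entries
        # Collapse runs of whitespace (tabs, newlines, double spaces) into single spaces.
        text = re.sub(r"\s+", " ", str(raw)).strip()
        if not text:
            continue  # skip entries that are empty after cleaning
        key = text.casefold()  # case-insensitive duplicate detection
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


messy = ["  Order  shipped ", "order shipped", "", None, "Refund\tissued"]
print(sanitize_records(messy))  # ['Order shipped', 'Refund issued']
```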

Data Formatting

For AutoGPT, data formatting means tailoring the data to suit the model’s requirements, which keeps data transformation running smoothly. Uniform formatting also makes results easier to replicate and makes different data sets easier to compare.
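
One way to get uniform formatting is to map every record onto a fixed schema before handing it to the tool. The sketch below assumes a hypothetical three-field schema (`id`, `date`, `text`) and JSON Lines as the output format; neither is something AutoGPT prescribes, so adapt both to your own data.

```python
import json

# Hypothetical schema used only for this illustration.
FIELDS = ("id", "date", "text")


def format_record(record):
    """Map a raw dict onto the fixed schema, with trimmed string values."""
    # Normalize key names so "ID", " Text " and "text" are treated alike.
    lowered = {str(key).strip().lower(): value for key, value in record.items()}
    normalized = {}
    for field in FIELDS:
        value = lowered.get(field)
        normalized[field] = "" if value is None else str(value).strip()
    return normalized


def to_json_lines(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(format_record(r), sort_keys=True) for r in records)


raw = [{"ID": 7, " Text ": " hello "}, {"id": 8, "date": "2023-05-01", "text": "bye"}]
print(to_json_lines(raw))
```

Because every record ends up with the same keys in the same order, two runs on the same input produce identical files, which helps with the replication and comparison mentioned above.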

Data Importing

Prior to pumping the data into the system, it is crucial to have it in an acceptable file format. Efficiently importing appropriately formatted files into AutoGPT paves the way for a smooth data processing journey.
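
The article does not say which file formats AutoGPT accepts, so the following is only a sketch of a common pattern: a loader that picks a parser based on the file extension and rejects anything it does not recognize. The supported set (`.csv`, `.json`, `.jsonl`) and the name `load_records` are assumptions for illustration.

```python
import csv
import json
from pathlib import Path

SUPPORTED = {".csv", ".json", ".jsonl"}


def load_records(path):
    """Load a CSV, JSON, or JSON Lines file into a list of records."""
    path = Path(path)
    suffix = path.suffix.lower()
    if suffix not in SUPPORTED:
        # Fail early, before touching the file, if the format is unknown.
        raise ValueError(f"Unsupported file format: {suffix or '(none)'}")
    with path.open(newline="", encoding="utf-8") as handle:
        if suffix == ".csv":
            return list(csv.DictReader(handle))
        if suffix == ".json":
            data = json.load(handle)
            return data if isinstance(data, list) else [data]
        # JSON Lines: one JSON object per non-empty line.
        return [json.loads(line) for line in handle if line.strip()]


# Example call (hypothetical file name):
# records = load_records("customers.csv")
```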

The Significance of Enhanced AutoGPT Utilization for Data Processing

Considering AutoGPT’s extensive application in areas like content creation, idea generation, predictive analytics, language translation, and more, using it well carries significant implications. Robust data preparation is essential for companies that deploy AutoGPT for business intelligence insights. Properly prepared data not only supports accurate outcomes but also accelerates decision-making, facilitates replication of results, and enables easy comparison across different datasets.

Consistent Learning and Evaluation: Crucial for Maximum Utilization

Getting the most out of AutoGPT demands thorough data sanitization, formatting, and importing, and this does not happen overnight. It takes regular practice, learning from mistakes, and adaptation, particularly because AutoGPT keeps evolving through regular updates. Constantly reviewing how data is processed and where improvements are possible is key to unleashing the full potential of AutoGPT for your data processing demands.

Applying AutoGPT for Data Processing

Decoding the Optimization of AutoGPT for Efficient Data Processing

AutoGPT, whose name combines “Auto” with GPT (Generative Pre-trained Transformer), is a powerful tool in the data processing universe. Valued for its adaptability to varying data types, larger model sizes, and high-performance capabilities, AutoGPT offers a new approach to data computing. It leverages modern machine learning strategies and a Transformer-based neural network architecture to facilitate effective and efficient data processing and analysis.

Running Queries

Operating AutoGPT involves running queries, which essentially means instructing the tool to perform specific tasks. Queries in AutoGPT are flexible and can be adjusted according to the nature and complexity of the task. The tool accepts commands in natural language, increasing user-friendliness and simplifying interaction. For example, a user might input a command like, “summarize the main points of this dataset,” and AutoGPT will respond accordingly.
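
To make the idea of a natural-language query concrete, here is a small sketch that combines an instruction with a preview of the data. It does not use AutoGPT’s real interface, which this article does not describe: `build_query` and `run_query` are hypothetical helpers, and `send` stands in for whatever function actually delivers the prompt to the tool.

```python
def build_query(instruction, rows, max_rows=20):
    """Combine a natural-language instruction with a preview of the data."""
    preview = rows[:max_rows]
    lines = [", ".join(f"{key}={value}" for key, value in row.items()) for row in preview]
    header = f"Data ({len(preview)} of {len(rows)} rows):"
    return "\n".join([instruction.strip(), "", header, *lines])


def run_query(instruction, rows, send):
    """Build the prompt and pass it to `send`, a caller-supplied callable."""
    return send(build_query(instruction, rows))


def fake_send(prompt):
    """Stand-in for a real client; it only reports how much text it received."""
    return f"received {len(prompt.splitlines())} lines"


rows = [{"region": "north", "sales": 120}, {"region": "south", "sales": 95}]
print(build_query("Summarize the main points of this dataset.", rows))
print(run_query("Summarize the main points of this dataset.", rows, fake_send))
```

Keeping prompt construction separate from delivery makes it easy to inspect exactly what is being asked before any request is sent.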

Analysis of Results

On completion of each query, AutoGPT generates results that need to be reviewed and interpreted. The tool’s output data can be numerical figures, graphs, or strings of text, depending on the task performed. These results may pertain to trends in the dataset, predictions based on the data, or summaries of complex narratives embedded in the data. The key is to critically analyze these results and derive meaningful insights.

Customizing Process Flows

One of the key benefits of using AutoGPT for data processing is the ability to customize process flows. Unlike many rigid models, AutoGPT gives users the freedom to dictate the data processing flow. This means that users can tailor the tool to their needs, optimizing its performance based on specific requirements and preferred methodologies. For instance, a user might prioritize quick results over extensive data exploration or vice versa. In either case, AutoGPT can be configured to deliver.

Optimization Techniques

Optimizing AutoGPT for data processing involves several strategies. One of these includes clean and organized data input. Since the quality of the output heavily depends on the quality of the input data, it’s vital to ensure the data fed into the system is clean, well-structured, and as comprehensive as possible.

Another optimization technique involves properly tuning the AutoGPT model, which involves adjusting parameters such as learning rate, batch size, number of training steps, and the number of layers in the neural network. By effectively tuning these parameters, users can significantly enhance the performance of AutoGPT in data processing tasks.

Integration with Other Tools

AutoGPT can be integrated with other data processing and analytics tools to improve its utilization and increase its efficiency. By complementing AutoGPT’s capabilities with other tools, users can handle more complex data processing tasks and derive more profound insights from their datasets. Integration usually demands some level of technical expertise, so working with a data scientist or a similar professional might be necessary during the integration process.

The Essence of AutoGPT

To leverage AutoGPT effectively for data processing tasks, you need a strong understanding of its operational characteristics, meticulous tuning, and seamless integration with other processing tools. Used this way, AutoGPT can contribute substantially to optimizing data processing tasks.

Advanced AutoGPT Techniques and Optimization

Exploring AutoGPT in Data Processing

Characterized as a cutting-edge tool in the realm of data processing, AutoGPT is adept at undertaking a variety of tasks. It is equipped to perform everything from basic data manipulation to executing sophisticated machine learning algorithms. The tool’s potency is attributed to its ability to learn patterns and subsequent application of these learned patterns to unfamiliar data. By optimizing a series of processing actions, it significantly enhances the performance of the whole system.

Improve Performance through Parameter Tuning

Parameter tuning is a fundamental aspect of optimizing AutoGPT. Modifying the software’s learning rate, batch size, and number of epochs can result in substantial performance improvements. Testing different parameter configurations can help users discover an optimal setting that balances processing speed, accuracy, and computational resource usage.
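
Testing different parameter configurations is often done with a simple grid search. The sketch below is generic Python rather than an AutoGPT feature: `grid_search` tries every combination and keeps the best score, while `toy_score` is a made-up stand-in for a real validation metric that you would supply yourself.

```python
from itertools import product


def grid_search(evaluate, grid):
    """Try every combination in `grid` and return the best config and its score."""
    names = list(grid)
    best_config, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_score(config):
    """Made-up metric that peaks at learning_rate=0.01, batch_size=32, epochs=5."""
    return (
        -abs(config["learning_rate"] - 0.01) * 100
        - abs(config["batch_size"] - 32) / 32
        - abs(config["epochs"] - 5) / 10
    )


grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [16, 32, 64],
    "epochs": [3, 5],
}
best_config, best_score = grid_search(toy_score, grid)
print(best_config)
```

A grid grows multiplicatively with each added parameter (18 combinations here), so in practice the ranges are kept small or sampled at random.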

Deploy Parallel Processing for Large Data

Large datasets often present unique challenges for data processing. By processing data in parallel, users can overcome bottlenecks caused by data volume. Splitting data into smaller subsets, distributing them across different processing units, and then aggregating the results can significantly shorten data processing times.
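
The split, distribute, and aggregate pattern can be sketched with Python’s standard `concurrent.futures` module. This is a generic illustration, not a description of how AutoGPT itself parallelizes work; the word-count task and the helper names are placeholders for whatever per-chunk processing you need.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def count_words(chunk):
    """Per-chunk work: here, simply count the words in each text."""
    return sum(len(text.split()) for text in chunk)


def parallel_word_count(texts, chunk_size=2, workers=4):
    """Process chunks concurrently, then aggregate the partial results."""
    chunks = chunked(texts, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(count_words, chunks))
    return sum(partials)


texts = ["a b", "c", "d e f", "g"]
print(parallel_word_count(texts))  # 7
```

Threads suit work that mostly waits on the network or disk, such as API calls. For CPU-heavy processing, `ProcessPoolExecutor` from the same module is the usual substitute.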

Reduce Errors through Data Preparation and Cleanup

Errors in data processing can lead to inaccuracies in the final outcomes. To reduce error margins, prepare your data meticulously before feeding it into AutoGPT. Data cleanup steps can identify and correct inconsistencies, outliers, and missing data points, ensuring high-quality input for your data analyses.
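
For numeric columns, a cleanup pass might fill missing values and flag outliers before any analysis. The sketch below is plain Python using the standard `statistics` module, not an AutoGPT tool. It assumes median imputation and the common 1.5 × IQR rule, which are two of several reasonable choices, and `clean_numeric` is a hypothetical helper name.

```python
from statistics import median, quantiles


def clean_numeric(values):
    """Fill missing values with the median and flag outliers by the 1.5 * IQR rule."""
    present = [v for v in values if v is not None]
    fill = median(present)
    filled = [fill if v is None else v for v in values]

    # Quartiles are computed on the observed values only (needs at least two).
    q1, _, q3 = quantiles(present, n=4)
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    outliers = [v for v in filled if v < low or v > high]
    return filled, outliers


readings = [10, 12, None, 11, 13, 12, 95]
filled, outliers = clean_numeric(readings)
print(filled)
print(outliers)
```

Whether a flagged value is an error or a genuine extreme is a judgment call, so this function reports outliers rather than deleting them.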

Integrate AutoGPT with Other Components

AutoGPT can be integrated with other software tools and languages. For instance, it can be coupled with the Python programming language and its libraries to create a more streamlined data processing ecosystem. This integration makes it easier to visualize data, present outputs, and improve data processing workflows.

Use Data Sampling Techniques to Speed Up Data Processing

When processing extremely large datasets, data sampling can significantly improve the speed and efficiency of AutoGPT. Rather than processing the entire dataset, AutoGPT can use a representative sample to generate insights. This technique reduces computational requirements and speeds up data processing, with little loss in the quality or accuracy of the analysis as long as the sample is truly representative.
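
A simple random sample is the most basic way to do this. The sketch below uses Python’s standard `random` module with a fixed seed so that the same sample can be reproduced later; `sample_records` is a hypothetical helper, and datasets with rare but important groups may call for stratified sampling instead.

```python
import random


def sample_records(records, fraction, seed=0):
    """Return a reproducible simple random sample containing `fraction` of the records."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be greater than 0 and at most 1")
    if not records:
        return []
    size = max(1, round(len(records) * fraction))
    # A dedicated Random instance keeps the sample independent of global random state.
    return random.Random(seed).sample(records, size)


population = list(range(1000))
subset = sample_records(population, 0.1, seed=42)
print(len(subset))  # 100
```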

Implement Regular Software Updates

Maintaining the latest version of AutoGPT is crucial for optimizing its usage. Updates often come with advanced features and bug fixes that can improve the software’s stability, functionality, and performance. Regular software updates also enhance security by patching potential vulnerabilities.

Automate Repetitive Tasks for Increased Efficiency

AutoGPT’s automation capabilities can be utilized to speed up repetitive data processing tasks. By automating tasks, you reduce the necessity for manual intervention, thus minimizing human error, boosting efficiency, and saving valuable time and resources.
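
As a small illustration of automating a repetitive task, the sketch below applies the same handler to every text file in a folder and records failures instead of stopping at the first one. It is ordinary Python rather than an AutoGPT capability, and `process_folder` and its `handler` argument are names invented for this example.

```python
from pathlib import Path


def process_folder(folder, handler, pattern="*.txt"):
    """Apply `handler` to the text of every matching file; collect results and failures."""
    results, failures = {}, {}
    for path in sorted(Path(folder).glob(pattern)):
        try:
            results[path.name] = handler(path.read_text(encoding="utf-8"))
        except Exception as exc:
            # Keep going so that one bad file does not stop the whole batch.
            failures[path.name] = str(exc)
    return results, failures


def word_count(text):
    """Example handler: count the words in a file."""
    return len(text.split())


# Example call (hypothetical folder name):
# results, failures = process_folder("reports", word_count)
```

Returning failures alongside results makes it easy to rerun only the files that need attention.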

In Conclusion

Optimizing AutoGPT for data processing requires a thoughtful combination of parameter tuning, parallel processing, data cleanup, integration with other components, data sampling, regular updates, and automation. By applying these strategies, you can be certain to amplify AutoGPT’s potential and enhance your data processing tasks.

Mastering AutoGPT and fully exploiting its potential can streamline your data processing tasks significantly, boosting your productivity, reliability, and overall efficiency. Getting the most out of this tool requires not only technical know-how but also a strategic approach: setting up the software correctly, preparing and formatting the data appropriately, and understanding the advanced techniques and optimization strategies. While AutoGPT brings immense benefits and possibilities, it’s your understanding and application that will truly unlock its potential. Equip yourself with these insights and step into the world of meticulous data processing with AutoGPT.