As we stand on the brink of a technological renaissance, neural networks have emerged as a cornerstone of modern computation and artificial intelligence. Loosely inspired by the structure of the human brain, neural networks can learn from data and adapt to new tasks, and they are steadily changing how we interact with the digital world. Since their inception at the intersection of biology and mathematics, these computational models have undergone an extraordinary evolution. This essay works through the fundamentals of neural networks and the mathematics that powers them: their neuron-like interconnections, their activation functions, and the algorithms that give them the ability to learn. Along the way we focus on where linear algebra meets calculus, building a foundation for understanding the current and future state of neural network technology.

Fundamentals of Neural Networks

The Fundamental Architecture of Neural Networks: An Exploration

Understanding the core principles and architectures of neural networks is a little like studying how the human brain processes information. Neural networks, among the most influential ideas in artificial intelligence, are loosely modeled on biological neural networks. By examining their structure, we can uncover the mechanics behind their impressive capabilities.

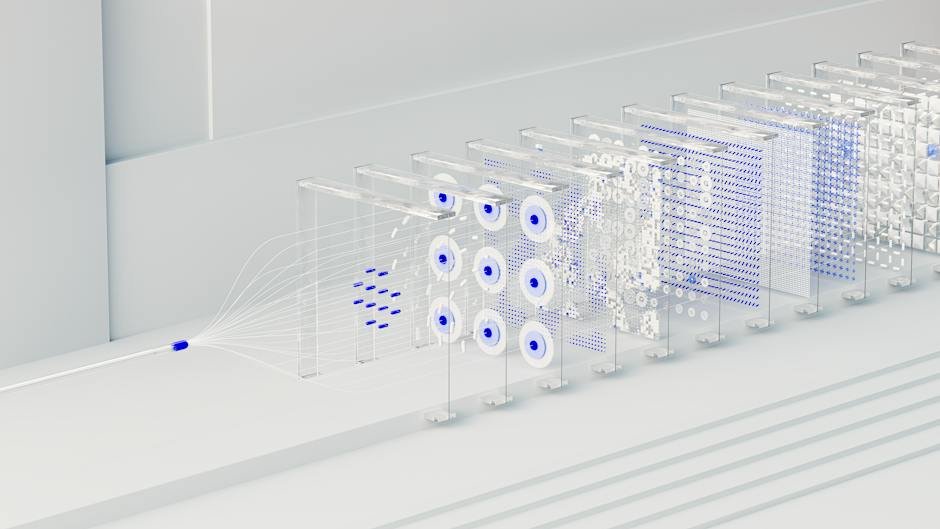

At their most elementary level, neural networks are composed of layers of artificial neurons, also called nodes or units, that are loosely modeled on biological neurons. These units are connected in a graph-like structure and operate by receiving, processing, and transmitting numerical signals. Their primary purpose is to approximate a function that maps input data to the corresponding outputs.

Architecturally, a neural network is built from three kinds of layers. The input layer receives the initial data. Between the input and the output sit one or more hidden layers, whose neurons transform the input through weighted connections and biases. Finally, the output layer delivers the network’s inference or prediction.
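
As a rough illustration of how data flows through these layers, the sketch below runs a forward pass through a tiny network with three inputs, two hidden neurons, and one output, in plain Python. The weights, the layer sizes, and the use of ReLU in the hidden layer are arbitrary choices for this example, not values from any trained model.

```python
def dense(inputs, weights, biases):
    """Fully connected layer: each neuron computes sum(w * x) + b."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


def relu(values):
    """Element-wise ReLU activation: max(0, v)."""
    return [max(0.0, v) for v in values]


# Illustrative weights for a 3 -> 2 -> 1 network (not from a trained model).
W_HIDDEN = [[0.5, -0.25, 0.25],
            [0.25, 0.5, -0.5]]
B_HIDDEN = [0.0, 0.0]
W_OUT = [[1.0, -1.0]]
B_OUT = [0.5]


def forward(x):
    hidden = relu(dense(x, W_HIDDEN, B_HIDDEN))  # input layer -> hidden layer
    return dense(hidden, W_OUT, B_OUT)           # hidden layer -> output layer


print(forward([1.0, 2.0, 3.0]))  # → [1.25]
```

Here the second hidden neuron’s weighted sum is negative (-0.25), so ReLU outputs 0.0 for it and only the first hidden neuron (0.75) contributes to the output.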

The neurons are the basic computational units of a neural network. Each neuron receives input values, multiplies them by weights learned during training, adds a bias, and passes the result through an activation function to produce its output. An activation function is a mathematical function, such as the sigmoid, tanh, or ReLU, that determines how strongly a neuron responds to its combined input. Because it is non-linear, it enables the network to capture non-linear relationships in the data; without it, any stack of layers would collapse into a single linear transformation.
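
The following minimal sketch shows a single neuron with three common activation functions (sigmoid, tanh, and ReLU, chosen here as typical examples) and then illustrates why non-linearity matters: two purely linear layers compose into one linear function. All weights and helper names are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


x, w, b = [2.0, -1.0], [0.5, 1.0], 1.0  # z = 0.5*2 + 1.0*(-1) + 1 = 1.0
print(neuron(x, w, b, sigmoid))    # ≈ 0.7311
print(neuron(x, w, b, math.tanh))  # ≈ 0.7616
print(neuron(x, w, b, relu))       # 1.0


# Without a non-linearity, stacking layers adds no expressive power:
# two linear maps compose into another linear map.
def linear_1(v):
    return 2.0 * v + 1.0


def linear_2(v):
    return 3.0 * v - 2.0


def stacked(v):
    return linear_2(linear_1(v))  # = 3(2v + 1) - 2 = 6v + 1, still linear
```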

Neural networks learn through the backpropagation algorithm, which computes how much each connection weight contributed to the error between the network’s outputs and the expected results. A gradient descent optimization algorithm then uses these gradients to adjust the weights, so the network iteratively reduces its error and refines its performance.
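
To make the learning loop concrete, here is a hedged sketch of gradient descent on the simplest possible network, a single sigmoid neuron learning the logical OR function. Backpropagation through one neuron reduces to a single application of the chain rule: with a sigmoid output and a binary cross-entropy cost, the gradient with respect to the neuron’s pre-activation is simply `p - y`. The dataset, learning rate, and epoch count are assumptions chosen for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy dataset: the logical OR function.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def loss(weights, bias):
    """Mean binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(epochs=1000, lr=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - y  # dLoss/dz for sigmoid + cross-entropy (chain rule)
            grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
            grad_b += error
        # Gradient descent step: move against the averaged gradient.
        weights = [w - lr * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(data)
    return weights, bias


initial_loss = loss([0.0, 0.0], 0.0)  # ln 2 ≈ 0.693: every prediction starts at 0.5
weights, bias = train()
print(initial_loss, loss(weights, bias))
```

The final loss is well below the starting value, and rounding the trained neuron’s outputs reproduces the OR truth table.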

Various architectures exist, each with particular advantages and applications. In feedforward neural networks, connections are unidirectional: information moves forward from the input layer, through any hidden layers, to the output layer. Convolutional Neural Networks (CNNs), tailored for visual tasks, process data with a grid-like topology by sliding small learned filters across it, which makes them excel at recognizing local patterns, such as edges and textures, in images.
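
The core operation of a convolutional layer can be sketched in a few lines. Below, a small 2×2 kernel slides over a 4×4 grid; the kernel is hand-picked to respond to a vertical edge, whereas a real CNN learns its kernel weights during training and usually adds padding, strides, multiple channels, and a bias. Like most deep-learning libraries, the sketch computes a cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2-D cross-correlation, as in a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-picked vertical-edge detector (a CNN would learn these weights).
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # → [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is large only where the window straddles the edge, and because the same kernel is reused at every position, the detector works wherever the edge appears.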

Recurrent Neural Networks (RNNs), in contrast, contain loops that carry a hidden state from one step to the next, allowing information to persist. This makes them suitable for sequential data such as natural language or time series. More evolved forms, such as Long Short-Term Memory networks (LSTMs), use gating mechanisms to overcome a key limitation of plain RNNs: their difficulty retaining information over long sequences.
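
The "loop" in an RNN is a hidden state that is fed back in at every time step. The sketch below uses a scalar Elman-style cell, h_t = tanh(w_x·x_t + w_h·h_{t-1} + b), with untrained, illustrative weights; real RNNs use weight matrices and vector-valued states, and LSTMs add gates on top of this basic recurrence.

```python
import math


def rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Scalar Elman-style RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    The default weights are illustrative, not trained."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # previous h feeds into this step
        states.append(h)
    return states


# Both sequences end with the same input (0.0), but the final states differ
# because the hidden state still carries the first input forward.
print(rnn([1.0, 0.0])[-1])   # ≈ 0.354
print(rnn([-1.0, 0.0])[-1])  # ≈ -0.354
```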

In conclusion, neural networks are at the heart of transformative computational methods, with an extraordinary capability for pattern recognition, data classification, and predictive modeling. By dissecting their core principles and architectures, we move closer to the ever-evolving frontier of artificial intelligence, an endeavor that continually broadens the horizon of what machines can understand and accomplish.

Training and Optimization

Training and Optimizing Neural Networks: The Path to AI Efficiency

In the quest to harness the vast capabilities of neural networks, the practical centerpiece lies in training and optimization. How well a network performs hinges on rigorous methods that refine its ability to learn from complex data, and on the strategies used to fine-tune it to perform tasks accurately.

The Principle of Backpropagation

Central to the training of neural networks is the backpropagation algorithm, which determines how each weight in the network should change. After each forward pass, backpropagation applies the chain rule layer by layer, from the output back toward the input, to compute the gradient of the error with respect to every weight; an optimizer then uses these gradients to reduce the error. Think of it as a meticulous teacher, guiding the network to learn from its mistakes by identifying which connections contributed to the error and nudging them slightly in the right direction.
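
One way to see what backpropagation computes is to check it against a brute-force estimate. The sketch below hand-derives the chain-rule gradients for a tiny two-weight network (a tanh hidden unit followed by a linear output, with a squared-error cost) and compares them with central finite differences. The network shape and all numbers are illustrative assumptions; deep-learning frameworks automate the same chain-rule bookkeeping for arbitrary networks.

```python
import math


def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden activation
    y_hat = w2 * h         # linear output
    return h, y_hat


def loss(w1, w2, x, y):
    _, y_hat = forward(w1, w2, x)
    return 0.5 * (y_hat - y) ** 2  # squared-error cost


def backprop(w1, w2, x, y):
    """Chain rule applied from the output back toward the input."""
    h, y_hat = forward(w1, w2, x)
    d_yhat = y_hat - y             # dL/dy_hat
    d_w2 = d_yhat * h              # dL/dw2 = dL/dy_hat * dy_hat/dw2
    d_h = d_yhat * w2              # error signal passed back to the hidden unit
    d_w1 = d_h * (1 - h ** 2) * x  # tanh'(a) = 1 - tanh(a)^2, and da/dw1 = x
    return d_w1, d_w2


def numerical_grad(w1, w2, x, y, eps=1e-6):
    """Central finite differences: slow, but a useful check on backprop."""
    g1 = (loss(w1 + eps, w2, x, y) - loss(w1 - eps, w2, x, y)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, y) - loss(w1, w2 - eps, x, y)) / (2 * eps)
    return g1, g2


w1, w2, x, y = 0.7, -1.3, 1.5, 0.4
print(backprop(w1, w2, x, y))
print(numerical_grad(w1, w2, x, y))  # agrees closely with the line above
```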

Cost Functions: The Success Metric

Every neural network aims to minimize its mistakes, which are quantified by a cost function (also called a loss function). A cost function measures the difference between the expected output and the output the network actually produces, aggregating the errors into a single number. Training continually adjusts the network to lower this value: a lower cost signifies a closer fit to the training data.
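
Two widely used cost functions are sketched below: mean squared error, typical for regression, and binary cross-entropy, typical for two-class classification. The choice of these two as examples, and the clipping constant `eps`, are assumptions for illustration.

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels.
    Predictions are clipped away from 0 and 1 to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(y_true)


print(mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.5, 2.0]))  # 1.25 / 3 ≈ 0.4167
print(binary_cross_entropy([1, 0], [0.9, 0.1]))  # ≈ 0.105 (confident and correct)
print(binary_cross_entropy([1, 0], [0.6, 0.4]))  # ≈ 0.511 (correct but hesitant)
```

Predictions closer to the targets yield a lower cost, which is exactly the signal training tries to push down.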

Data Division: Learning from Variety

To train a neural network effectively, the data is usually split into three distinct sets: training, validation, and testing. The training set is the main resource for learning, allowing the network to establish correlations and patterns. The validation set is used to tune hyperparameters and to detect overfitting, which occurs when a model learns the details and noise in the training data to the extent that it performs worse on new data. Lastly, the testing set, held out until the very end, provides an unbiased estimate of how the final model will perform in the real world.
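
A minimal way to produce the three sets is to shuffle once and slice, as in the sketch below. The 70/15/15 proportions and the fixed seed are illustrative defaults, not requirements; the essential property is that the three sets do not overlap.

```python
import random


def train_val_test_split(data, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle once, then carve out disjoint validation and test sets."""
    items = list(data)
    random.Random(seed).shuffle(items)  # a fixed seed makes the split reproducible
    n_test = int(len(items) * test_frac)
    n_val = int(len(items) * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # → 70 15 15
```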

Regularization: The Guard Against Overfitting

Striking a delicate balance between learning from the data and merely memorizing it is the art of regularization. Techniques such as L1 and L2 regularization add a penalty on large weights to the cost function, steering the model toward simpler solutions that capture only the most significant features in the data. Another method, dropout, randomly deactivates neurons during training, encouraging a more robust and distributed internal representation of the data.
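
The sketch below shows what the regularization terms and dropout compute. The penalty strength `lam` and the dropout rate are hypothetical values; the dropout shown is the common "inverted" variant, which rescales surviving activations during training so that nothing needs to change at inference time.

```python
import random


def l1_penalty(weights, lam):
    """L1 term added to the cost: lam * sum(|w|)."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 term added to the cost: lam * sum(w^2)."""
    return lam * sum(w * w for w in weights)


def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: during training, zero each activation with probability
    p_drop and scale the survivors by 1 / (1 - p_drop), so the expected value is
    unchanged and inference needs no rescaling."""
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


weights = [0.5, -1.0, 2.0]
print(l1_penalty(weights, lam=0.01))  # 0.01 * 3.5, ≈ 0.035
print(l2_penalty(weights, lam=0.01))  # 0.01 * 5.25, ≈ 0.0525
print(dropout([1.0] * 8, p_drop=0.5, rng=random.Random(0)))  # entries are 0.0 or 2.0
```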

Optimizers: Steering the Learning Process

Optimizers are the navigators of the neural network training journey. Algorithms such as Stochastic Gradient Descent (SGD), Adam, and RMSProp use the gradients from backpropagation to update the weights. Plain SGD takes steps of a fixed (or scheduled) size, while adaptive methods such as RMSProp and Adam scale the step for each parameter based on the history of its gradients. The step size must balance speed and stability: large enough for the network to learn efficiently, yet small enough to avoid overshooting a minimum of the cost function.
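
The differences between these optimizers are easiest to see in their update rules. The sketch below minimizes a toy one-dimensional cost, f(w) = (w - 3)^2, with plain SGD, RMSProp, and Adam. The cost function, learning rates, and step counts are assumptions for illustration; Adam’s decay constants follow the values suggested in its original paper, and real framework implementations offer extra options (such as weight decay) omitted here.

```python
import math


def grad(w):
    """Gradient of the toy cost f(w) = (w - 3)^2, minimized at w = 3."""
    return 2.0 * (w - 3.0)


def sgd(w, lr=0.1, steps=200):
    for _ in range(steps):
        w -= lr * grad(w)                      # same step size every iteration
    return w


def rmsprop(w, lr=0.01, rho=0.9, eps=1e-8, steps=2000):
    s = 0.0
    for _ in range(steps):
        g = grad(w)
        s = rho * s + (1 - rho) * g * g        # running average of squared gradients
        w -= lr * g / (math.sqrt(s) + eps)     # step scaled per parameter
    return w


def adam(w, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g        # first moment (momentum)
        v = beta2 * v + (1 - beta2) * g * g    # second moment
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero init
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


for name, opt in [("SGD", sgd), ("RMSProp", rmsprop), ("Adam", adam)]:
    print(name, opt(0.0))  # each should end close to the minimum at w = 3
```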

Hyperparameter Tuning: Dialing in Success

A neural network’s performance often depends heavily on careful hyperparameter tuning. Hyperparameters are settings chosen before training rather than learned from the data, such as the learning rate, batch size, and network architecture. Practitioners explore the large space of possible combinations by trial and error, grid search, random search, or more sophisticated methods such as Bayesian optimization.
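
Random search, one of the methods listed above, fits in a few lines. In the sketch below, `validation_loss` is a hypothetical stand-in for actually training a model and measuring it on the validation set; its shape, the search ranges, and the trial count are all assumptions. Sampling the learning rate on a logarithmic scale is common practice because plausible values span several orders of magnitude.

```python
import math
import random


def validation_loss(learning_rate, batch_size):
    """Hypothetical stand-in for 'train a model with these settings and return
    its validation loss'. This toy surface is lowest near lr=1e-3, batch=64."""
    return (math.log10(learning_rate) + 3) ** 2 + (math.log2(batch_size) - 6) ** 2 / 10


def random_search(objective, n_trials=50, seed=0):
    """Evaluate randomly sampled configurations and keep the best one."""
    rng = random.Random(seed)
    best_score, best_config = float("inf"), None
    for _ in range(n_trials):
        config = {
            # Log-uniform sampling: useful learning rates span several decades.
            "learning_rate": 10 ** rng.uniform(-5, -1),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        score = objective(**config)
        if score < best_score:
            best_score, best_config = score, config
    return best_score, best_config


best_score, best_config = random_search(validation_loss)
print(best_score, best_config)
```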

Transfer Learning: Leaping Forward

In certain scenarios, neural networks can take a shortcut through transfer learning. Starting from a network pre-trained on a massive dataset, researchers adapt it to a related but smaller dataset, often by reusing the learned layers and retraining only the final ones. This method leverages prior knowledge, reducing the amount of computation and data required for training.
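
A common transfer-learning recipe is to freeze the pre-trained layers and train only a new output layer on the smaller dataset. The sketch below imitates that with a hypothetical frozen feature extractor (`PRETRAINED_W` is a made-up stand-in for weights learned elsewhere) and a logistic-regression head trained by gradient descent on a made-up four-example dataset.

```python
import math

# Hypothetical pre-trained weights: in practice these would come from a network
# trained on a large dataset. Here they are made-up numbers kept frozen.
PRETRAINED_W = [[1.0, 1.0], [1.0, -1.0]]


def extract_features(x):
    """Frozen feature extractor: a ReLU layer whose weights are never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, lr=0.4, epochs=1000):
    """Train only a new logistic-regression output layer by gradient descent."""
    head_w, head_b = [0.0, 0.0], 0.0
    # The extractor is frozen, so its features can be computed once up front.
    feats = [(extract_features(x), y) for x, y in data]
    n = len(feats)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for f, y in feats:
            p = sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)
            err = p - y  # cross-entropy gradient w.r.t. the head's pre-activation
            grad_w = [g + err * fi for g, fi in zip(grad_w, f)]
            grad_b += err
        head_w = [w - lr * g / n for w, g in zip(head_w, grad_w)]
        head_b -= lr * grad_b / n
    return head_w, head_b


def predict(x, head_w, head_b):
    f = extract_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)


# A tiny, made-up dataset for the new task.
small_data = [([2.0, 1.0], 1), ([1.0, 1.0], 0), ([3.0, 0.0], 1), ([0.5, 0.5], 0)]
head_w, head_b = train_head(small_data)
print([round(predict(x, head_w, head_b)) for x, _ in small_data])
```

Because the extractor never changes, its features are computed only once, which is one source of the computational savings transfer learning offers.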

In conclusion, training and effectively optimizing neural networks is a multi-faceted feat that requires a meticulous blend of mathematical understanding and strategic experimentation. It involves a cycle of hypothesis, experimentation, and learning that gradually enhances the network’s ability to sift through data and extract meaningful insights. As the field progresses, advancements in training methodologies continue to push the boundaries of what is possible in artificial intelligence, further entrenching neural networks as a linchpin of technological innovation.

Applications of Neural Networks

Neural Networks: Pioneering Progress in Diverse Fields

Neural networks are among the most compelling advances in artificial intelligence, with the capacity to fundamentally transform a wide range of scientific and industrial domains. Loosely inspired by the biological neural networks of living organisms, these architectures are fast becoming a cornerstone of innovation across many fields because of their ability to learn from data.

One of the most significant impacts of neural networks can be observed in healthcare. The algorithms underlying these systems are adept at discerning patterns within vast datasets, a skill that is particularly valuable in medical diagnostics. Neural networks can help improve diagnostic accuracy, notably by detecting subtle features in imaging data such as MRI scans and X-rays that might elude even a well-trained human eye.

In robotics, neural networks provide the perception and decision-making capabilities that are propelling these machines toward greater autonomy. By recognizing patterns and interpreting sensor data, robots gain better situational awareness and decision-making skills. These advances are seeing substantial use in manufacturing, where robotic systems equipped with neural networks are streamlining production processes, improving both efficiency and safety.

Finance is another sphere being transformed by neural networks. These models are used to forecast market trends and movements. Their ability to analyze enormous datasets, including historical prices and economic indicators, can give traders and financial institutions a competitive edge through data-driven forecasts and risk assessments.

The communications sector is also reaping benefits from these systems. Neural networks are employed in natural language processing to improve machine translation and speech recognition. They are refining not only how devices interpret human speech but also how information is shared across languages and cultures, fostering global connectivity.

In transportation, neural networks are central to the development of autonomous vehicles. Self-driving cars rely on many algorithms working in concert to process sensor data and make split-second decisions, a complex task on which safety and operational reliability depend. Data collected on the road can also be used to retrain the networks, so that experience accumulated across many journeys helps refine the autonomous systems.

The applications of neural networks are not confined to the practical and tangible; they have also sparked a revolution in creative fields. In art and design, neural networks are used to generate new forms and patterns, sometimes producing artwork that viewers struggle to distinguish from the work of human artists. These algorithms have opened new modes of human-machine collaboration, inviting fresh perspectives on creativity.

In conclusion, the effects of neural networks are reverberating across sectors, refining existing systems and opening pathways into uncharted territory. Whether by improving healthcare, informing economic decisions, or enriching cultural exchange, the applications of neural networks are vast, diverse, and deeply woven into contemporary progress. Their continued integration across disciplines promises a future replete with innovation.

Evolving Neural Network Architectures

Advances in neural network architectures continue to propel artificial intelligence forward at remarkable speed. One area of marked progress is attention mechanisms, particularly the Transformer model. Instead of passing information along a sequence step by step as recurrent models do, a Transformer uses self-attention to let every position in the sequence attend directly to every other position. This has allowed it to surpass traditional recurrent models on many tasks that require understanding context, such as language translation.
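
The heart of the Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)V. The plain-Python sketch below implements that formula for a short sequence. To keep it small, it omits the learned query, key, and value projections, the multiple heads, and the masking that a real Transformer uses, so the token vectors here serve directly as queries, keys, and values.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.
    Q, K, V are lists of row vectors, one row per sequence position."""
    d_k = len(K[0])
    output = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # how much this position attends to each position
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output


# Self-attention over a 3-token sequence: queries, keys and values are the same tokens.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(X, X, X)
print(out)
```

Each output row is a weighted average of the value vectors, with weights determined by how well that position’s query matches every key.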

Another leading architecture is the Generative Adversarial Network (GAN). A GAN pits two neural networks against each other: a generator, which produces synthetic samples, and a discriminator, which tries to tell generated samples from real ones. As each network improves against the other, the generator learns to synthesize strikingly realistic images and other media. Their uses range from creating art to making simulations more realistic.
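
The adversarial game can be summarized by the loss each network minimizes. The sketch below computes both from discriminator outputs (the probability that a sample is real), using binary cross-entropy for the discriminator and the widely used "non-saturating" form for the generator. The networks themselves and the alternating training loop are omitted, and the example probabilities are made up.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator: it is rewarded for pushing
    D(x) toward 1 on real samples and D(G(z)) toward 0 on generated ones."""
    real_term = sum(math.log(r) for r in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - f) for f in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator is rewarded for pushing
    D(G(z)) toward 1, i.e. for fooling the discriminator."""
    return -sum(math.log(f) for f in d_fake) / len(d_fake)


# Hypothetical discriminator outputs: probability that each sample is real.
sharp_d = {"real": [0.9, 0.95], "fake": [0.1, 0.05]}  # D separates real from fake
fooled_d = {"real": [0.5, 0.5], "fake": [0.5, 0.5]}   # D cannot tell them apart

for name, d in [("sharp", sharp_d), ("fooled", fooled_d)]:
    print(name, discriminator_loss(d["real"], d["fake"]), generator_loss(d["fake"]))
```

When the discriminator is confident, its loss is low and the generator’s is high; when the discriminator outputs 0.5 everywhere, its loss is 2·ln 2 ≈ 1.386, the value at the game’s theoretical equilibrium.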

Capsule networks, while not as widely implemented as other models, offer a promising way to address the limitations of convolutional neural networks (CNNs) in modeling spatial hierarchies. Instead of representing each detected feature with a single scalar activation, capsule networks use groups of neurons, or capsules, whose output vectors encode an entity’s various properties, including its pose. This helps the network recognize objects in different orientations and compositions.
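
Capsule networks represent each entity with a vector rather than a single number. In the dynamic-routing formulation of Sabour, Frosst, and Hinton, a "squash" non-linearity rescales that vector so its length lies between 0 and 1 (read as the probability that the entity is present) while its direction, which encodes properties such as pose, is preserved. A sketch of the squash function alone is below; the routing procedure between capsule layers is not shown.

```python
import math


def squash(s, eps=1e-9):
    """Capsule squash: v = (|s|^2 / (1 + |s|^2)) * s / |s|.
    Keeps the vector's direction and maps its length into [0, 1)."""
    norm_sq = sum(x * x for x in s)
    norm = math.sqrt(norm_sq)
    scale = norm_sq / (1.0 + norm_sq) / (norm + eps)  # eps avoids division by zero
    return [scale * x for x in s]


def length(v):
    return math.sqrt(sum(x * x for x in v))


print(length(squash([0.1, 0.0])))   # short input -> length near 0 (≈ 0.0099)
print(length(squash([10.0, 0.0])))  # long input  -> length near 1 (≈ 0.990)
```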

Spiking neural networks (SNNs) more closely mimic the way real neurons function: individual neurons communicate through discrete spikes, emitted when an internal potential crosses a threshold. This bio-inspiration provides a pathway toward more efficient forms of computation, especially on dedicated hardware known as neuromorphic computing. SNNs show great promise in areas where energy efficiency is paramount.
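
A common simplified model of a spiking neuron is the leaky integrate-and-fire (LIF) neuron: its membrane potential leaks over time, accumulates input, and emits a discrete spike when it crosses a threshold. The discrete-time sketch below uses illustrative values for the threshold, leak factor, and input current; real SNN simulators and neuromorphic chips use more detailed dynamics.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron.
    Each step the potential decays by `leak`, adds the input, and emits a
    spike (1) when it reaches `threshold`, after which it resets."""
    v = reset
    spikes = []
    for current in input_current:
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)
            v = reset
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.3] * 10))  # → [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

With no input the neuron stays silent (`simulate_lif([0.0] * 10)` produces no spikes); this event-driven sparsity is part of why SNNs suit energy-constrained hardware.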

Neural Architecture Search (NAS) automates the design of neural networks: a search algorithm explores a space of candidate architectures to find one that performs well on a given task. This can greatly reduce the manual effort involved in designing a neural network and has the potential to uncover new, superior architectures.
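
The simplest NAS baseline is random search over a space of candidate architectures. In the sketch below, the search space is hypothetical, and `toy_evaluate` is a placeholder score standing in for the expensive step of training each candidate and measuring its validation performance; practical NAS systems use more sophisticated search strategies and cheaper evaluation.

```python
import random


def sample_architecture(rng):
    """Draw one candidate from a small, hypothetical search space."""
    depth = rng.randint(1, 4)
    return {
        "hidden_layers": [rng.choice([16, 32, 64, 128]) for _ in range(depth)],
        "activation": rng.choice(["relu", "tanh"]),
    }


def random_architecture_search(evaluate, n_candidates=20, seed=0):
    """Random-search NAS: sample candidates and keep the best-scoring one.
    `evaluate` should train a candidate and return a score (higher is better)."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(n_candidates):
        arch = sample_architecture(rng)
        score = evaluate(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


def toy_evaluate(arch):
    """Hypothetical placeholder for real training: rewards depth but penalizes
    total hidden units. Substitute actual validation accuracy in practice."""
    return len(arch["hidden_layers"]) - sum(arch["hidden_layers"]) / 100


best_arch, best_score = random_architecture_search(toy_evaluate)
print(best_arch, best_score)
```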

Graph neural networks (GNNs) have emerged as a distinctive approach to structured data. They operate directly on graphs, passing information between connected nodes to capture relationships and interconnectivity. This makes them well suited to complex tasks such as social network analysis, recommendation systems, and molecular chemistry, where the data have a natural graph structure.
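
Most GNNs are built from message-passing layers in which each node aggregates its neighbors’ features. The sketch below implements one graph-convolution layer following the normalization used in Kipf and Welling’s graph convolutional network (GCN), H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), on a three-node path graph; the graph, features, and weights are illustrative.

```python
import math


def gcn_layer(adjacency, features, weight):
    """One GCN-style layer: H' = ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adjacency)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adjacency[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Message passing: degree-normalized sum over each node and its neighbors.
    aggregated = [
        [sum(a_hat[i][j] * features[j][k] / math.sqrt(deg[i] * deg[j]) for j in range(n))
         for k in range(len(features[0]))]
        for i in range(n)
    ]
    # Shared linear transform W, then ReLU.
    return [
        [max(0.0, sum(row[k] * weight[k][m] for k in range(len(row))))
         for m in range(len(weight[0]))]
        for row in aggregated
    ]


# A 3-node path graph 0 - 1 - 2 with two features per node.
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
H = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
W = [[1.0], [1.0]]  # illustrative weights mapping 2 features to 1
print(gcn_layer(A, H, W))  # ≈ [[0.908], [1.558], [1.408]]
```

Stacking several such layers lets information travel further across the graph, one hop per layer.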

In summary, the latest neural network architectures are characterized by their diversity and by the inventive ways they address specific limitations of earlier models. These developments herald a future in which neural networks become even more intertwined with many sectors, driving efficiency and enabling advances that once seemed the realm of science fiction. With continued research and refinement, neural network architectures will keep expanding the horizons of artificial intelligence.

Ethical Implications and Future Prospects

Ethical Considerations and Future Prospects Surrounding Neural Networks

Neural networks, as an intrinsic component of machine learning and artificial intelligence, are accelerating advancements in myriad sectors. Yet, ethical considerations and future prospects merit rigorous examination to navigate the intricate web of benefits and potential risks associated with these powerful tools.

Ethical Dichotomy in Machine Learning

A primary ethical dilemma lies in the data that drives neural networks. Bias, whether introduced inadvertently through skewed datasets or through underlying human prejudices, can perpetuate or exacerbate discrimination. Responsible data curation and algorithm auditing are crucial to ensuring that the decisions, categorizations, or recommendations made by neural networks do not unfairly disadvantage particular groups or individuals. Developers and researchers are responsible for building fairness, transparency, and accountability into neural network deployments.
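
Algorithm auditing often starts with simple group-level statistics. The sketch below computes one such measure, the demographic parity difference: the largest gap in positive-outcome rates between groups. The predictions and group labels are hypothetical, and this is only one of several fairness criteria an audit might examine.

```python
def positive_rate(predictions, groups, group):
    """Fraction of members of `group` that received a positive (1) prediction."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rates between any two groups.
    0.0 means every group receives positive outcomes at the same rate."""
    rates = [positive_rate(predictions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


# Hypothetical model decisions (1 = approved) for applicants in groups "a" and "b".
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(demographic_parity_difference(preds, groups))  # → 0.5 (75% vs 25% approved)
```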

Privacy and Neural Networks

The voracious appetite of neural networks for data raises significant privacy concerns. The extraction of insights from vast datasets can lead to erosion of anonymity, often without the explicit consent or knowledge of the data providers. Guarding against unauthorized use and ensuring data protection are paramount to maintaining trust and privacy in the era of big data.

Autonomy and the Role of Neural Networks

As neural networks become increasingly sophisticated, the autonomy granted to these systems raises ethical quandaries. Delegating critical decisions to machines, such as in medical diagnostics or autonomous vehicles, requires a high and well-justified level of trust in the reliability and judgment of these algorithms, since no model is infallible. Determining the appropriate level of human oversight is essential to mitigate the risks of ceding control to artificial agents.

Future Prospects in Ethical Algorithm Design

The foreseeable future points toward an amplified integration of neural networks into societal frameworks. The adoption of ethical guidelines and the development of models sensitive to ethical dimensions will be crucial. Innovations such as explainable AI (XAI), which seeks to make the decision-making processes of neural networks transparent, and ethical AI frameworks designed to align machine learning applications with human values, are likely to shape the trajectory of future research and development.

Assuring Robustness and Security

Alongside ethical imperatives, the robustness and security of neural networks against adversarial attacks must be a research priority. Ensuring the integrity of neural network outputs against malicious tampering or data corruption is central to sustaining confidence in systems spanning from cybersecurity to public safety.
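
A classic adversarial attack is the Fast Gradient Sign Method (FGSM), which perturbs each input feature by a small amount ε in the direction that increases the model’s loss. The sketch below applies it to a hypothetical logistic-regression model, where the input gradient has a closed form; attacks on deep networks compute the same gradient by backpropagation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method: move every input feature by epsilon in the
    direction that increases the loss. For binary cross-entropy on a logistic
    model, the gradient of the loss with respect to x is (p - y) * w."""
    p = predict(w, b, x)
    grad_x = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad_x)]


w, b = [2.0, -1.0], 0.0  # hypothetical trained weights
x, y = [0.5, 0.2], 1     # a correctly classified input (p ≈ 0.69)
x_adv = fgsm(w, b, x, y, epsilon=0.3)
print(predict(w, b, x), predict(w, b, x_adv))  # ≈ 0.69, then ≈ 0.475: the label flips
```

A perturbation of at most 0.3 per feature is enough to flip this model’s decision, which is why robustness needs to be tested deliberately rather than assumed.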

The Future Landscape of Neural Network Integration

Looking ahead, the ongoing refinement of neural network technology is anticipated to precipitate transformative changes, spanning from enhanced climatological models predicting natural disasters to advanced language models enabling seamless real-time translation and communication. The potential to further individual and collective well-being is vast, but a concurrent commitment to ethical foresight is indispensable.

In summation, neural networks stand at the nexus of unparalleled opportunity and significant ethical responsibility. As architects and custodians of these systems, researchers, developers, and policy-makers must diligently foster an equilibrium between innovation and the safeguarding of societal mores and individual rights. The enduring quest for this balance will indubitably shape the profound impact of neural networks on the future trajectory of civilization.

The dynamism of neural networks is undeniable, and they are poised to redefine what is possible within machine learning and beyond. As we examine the ethical quandaries entangled in this technology-centered future, we must also navigate the moral and societal responsibilities that accompany such profound advances. Through careful analysis of evolving architectures, and attention to the areas that current regulations have yet to address, neural networks hold up a mirror to our collective aspirations and challenges. They embody innovation while demanding vigilant stewardship. As agents of change, neural networks beckon us toward a horizon rich with complexity, opportunity, and the unyielding pursuit of knowledge that propels humanity forward. Therein lies both the promise and the need for wisdom in steering this digital odyssey with conscientious governance, ensuring that the future we build is as inclusive and beneficial as the technology itself is brilliant.