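Neural networks are one of the central model families in machine learning, and TensorFlow is a widely used framework for building and training them. Together they turn raw data such as images, audio, or text into models that can classify, predict, and detect patterns.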

Introduction to Neural Networks

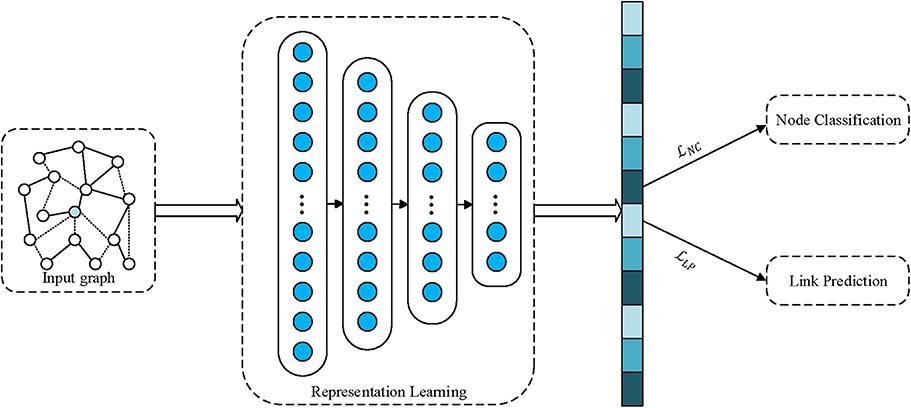

Neural networks are computational models inspired by the human brain, designed to recognize patterns and learn from data. They consist of interconnected layers: input, hidden, and output.

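The input layer receives the raw features and passes them on. Each neuron in a hidden layer computes a weighted sum of its inputs plus a bias, and these weights and biases are adjusted during training. The result then passes through an activation function such as ReLU (rectified linear unit, max(0, x)), which passes positive values through unchanged and outputs zero otherwise. This nonlinearity is what lets the network model relationships that a purely linear model cannot.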
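One hidden layer's computation can be sketched in a few lines of NumPy. This is an illustrative example rather than TensorFlow code: the input, weights, and bias values are made up (in a trained network the weights and biases are learned), and dense_forward is a hypothetical helper name.

```python
import numpy as np

def relu(z):
    # ReLU keeps positive values and replaces negative values with 0.
    return np.maximum(0.0, z)

def dense_forward(x, W, b):
    # Each row of W holds one neuron's weights; W @ x gives every
    # neuron's weighted sum of the inputs, then the bias is added.
    return relu(W @ x + b)

# Illustrative values only: 3 inputs feeding a hidden layer of 2 neurons.
x = np.array([1.0, 2.0, 3.0])
W = np.array([[0.2, -0.5, 0.1],
              [0.4, 0.3, -0.2]])
b = np.array([0.1, -0.1])

h = dense_forward(x, W, b)
print(h)
```

The first neuron's weighted sum is negative (0.2 - 1.0 + 0.3 + 0.1 = -0.4), so ReLU outputs 0 and that neuron stays inactive for this input; the second neuron's sum is 0.3, which passes through unchanged.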

The output layer produces the final result, often using activation functions like softmax to generate probabilities for classification tasks.
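Softmax turns a vector of raw output scores (logits) into probabilities that are positive and sum to 1. A minimal NumPy sketch, using hypothetical logits for a three-class problem; TensorFlow provides the same operation as tf.nn.softmax.

```python
import numpy as np

def softmax(logits):
    # Subtracting the max leaves the result unchanged but avoids overflow in exp.
    shifted = logits - np.max(logits)
    exps = np.exp(shifted)
    return exps / np.sum(exps)

# Hypothetical raw scores (logits) from an output layer with 3 classes.
logits = np.array([2.0, 1.0, 0.1])
probs = softmax(logits)

print(probs)             # one probability per class
print(probs.sum())       # 1.0, up to floating-point rounding
print(np.argmax(probs))  # 0, the class with the largest logit
```

Because softmax preserves the ordering of the logits, the predicted class is the same whether you take the argmax of the logits or of the probabilities; softmax matters when you need calibrated-looking scores or a probability-based loss.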

Neural networks have diverse applications, including:

- Voice recognition

- Self-driving cars

- Medical image analysis

Their adaptability makes them suitable for various challenges in data processing and decision-making.

TensorFlow Basics

TensorFlow is a framework for building and training neural networks. Its core components include:

- Tensors: Multi-dimensional arrays representing data in the network

- Operations: Tasks that manipulate these tensors, such as mathematical functions

- Data flow graphs: Structures in which the nodes are operations and the edges are the tensors passing between them, defining how data is transformed as it moves through the network
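A minimal sketch of these three ideas, assuming TensorFlow 2.x, where operations run eagerly by default and tf.function traces a Python function into a graph. The tensor values and the dense_relu function name are illustrative.

```python
import tensorflow as tf

# Tensors: typed, multi-dimensional arrays.
x = tf.constant([[1.0, 2.0]])    # shape (1, 2)
w = tf.constant([[0.5], [1.0]])  # shape (2, 1)
b = tf.constant([0.25])          # shape (1,)

# Operations: functions that consume tensors and produce new tensors.
def dense_relu(x, w, b):
    return tf.nn.relu(tf.matmul(x, w) + b)

# Eager mode: each operation runs immediately, like ordinary Python.
eager_out = dense_relu(x, w, b)

# Data flow graph: tf.function traces the same Python code into a graph
# whose nodes are operations and whose edges carry tensors.
graph_fn = tf.function(dense_relu)
graph_out = graph_fn(x, w, b)

concrete = graph_fn.get_concrete_function(x, w, b)
op_types = [op.type for op in concrete.graph.get_operations()]

print(eager_out.numpy(), graph_out.numpy())
print(op_types)  # includes node types such as 'MatMul' and 'Relu'
```

Both paths compute the same value; the graph version lets TensorFlow optimize and reuse the traced computation instead of re-executing Python line by line.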

TensorFlow's structured approach simplifies the construction of complex neural networks, making it a popular choice among developers for tasks like language translation, image recognition, and predictive analytics.

Building Neural Networks with TensorFlow

TensorFlow's Keras API provides a straightforward way to build neural networks. Here's a basic process:

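- Define the model structure:

```python
import tensorflow as tf

# A small fully connected classifier for 28x28 grayscale images with 10 classes.
model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),  # 28x28 image -> 784-element vector
    tf.keras.layers.Dense(128, activation='relu'),  # hidden layer
    tf.keras.layers.Dropout(0.2),                   # drops 20% of activations during training
    tf.keras.layers.Dense(10)                       # one raw score (logit) per class, no softmax
])
```

- Compile the model:

```python
# from_logits=True tells the loss to apply softmax itself,
# because the last layer outputs raw logits.
model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])
```

- Train the model:

```python
# The training data is not defined in this article; this assumes MNIST,
# which matches the 28x28 input shape and the 10 output classes.
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0  # scale pixel values to [0, 1]

model.fit(x_train, y_train, epochs=5)
```

- Evaluate the model:

```python
test_loss, test_acc = model.evaluate(x_test, y_test, verbose=2)
print(f'Test accuracy: {test_acc}')
```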

This process allows for efficient experimentation with different architectures and hyperparameters to optimize model performance.

Convolutional Neural Networks (CNNs)

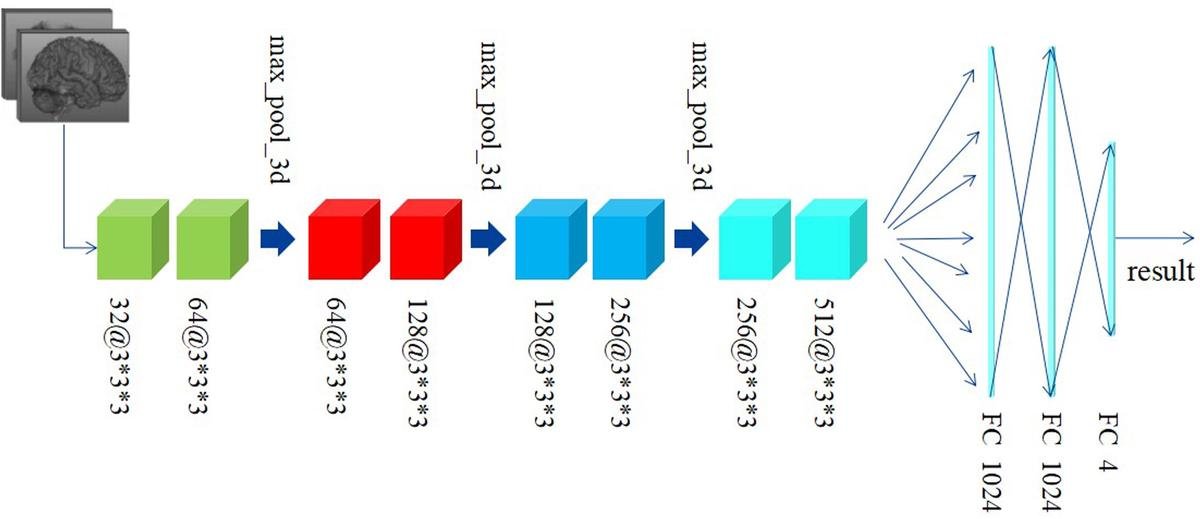

Convolutional Neural Networks (CNNs) are specialized for processing visual data. Their convolutional layers slide small learnable filters across the image, and each filter produces a feature map that responds to a local pattern such as an edge, a texture, or a color.

CNNs typically include:

- Convolutional layers for feature extraction

- Activation layers (often ReLU) to introduce non-linearity

- Pooling layers to reduce the spatial size of feature maps

- Fully connected layers for final classification

This architecture makes CNNs effective for tasks like image recognition, facial recognition, and medical image analysis. They're also crucial in autonomous vehicle technology for object detection and environment interpretation.
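To make the convolution, activation, and pooling steps concrete, here is a from-scratch NumPy sketch on a toy 6x6 image. It is for illustration only: TensorFlow's tf.keras.layers.Conv2D and tf.keras.layers.MaxPooling2D are far more efficient and also handle channels, strides, and padding. The image and the hand-picked vertical-edge filter are assumptions; in a CNN the filter weights are learned.

```python
import numpy as np

def conv2d(image, kernel):
    # "Valid" 2D cross-correlation (what deep learning libraries call
    # convolution): slide the kernel over the image, no padding, stride 1.
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(z):
    return np.maximum(0.0, z)

def max_pool2d(fmap, size=2):
    # Non-overlapping max pooling; rows/columns that don't fill a window are dropped.
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    trimmed = fmap[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# Toy 6x6 grayscale image: dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked vertical-edge filter (illustrative; a CNN learns its filters).
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

fmap = relu(conv2d(image, kernel))  # 4x4 feature map
pooled = max_pool2d(fmap)           # 2x2 after pooling
print(fmap)
print(pooled)
```

The filter responds only in the columns where the dark and bright regions meet, and pooling halves each spatial dimension while keeping the strongest responses. In a full CNN, the pooled maps would then be flattened and passed to fully connected layers for classification.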

Evaluating and Optimizing Neural Networks

Evaluating neural networks involves metrics such as accuracy, precision, and recall. These can be implemented using TensorFlow's metrics module:

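```python
from tensorflow.keras.metrics import Precision, Recall

# Precision and Recall threshold predictions at 0.5 by default, so this setup
# assumes a binary classifier whose last layer outputs a sigmoid probability
# (unlike the 10-class model above).
precision = Precision()
recall = Recall()

model.compile(optimizer='adam',
              loss='binary_crossentropy',
              metrics=['accuracy', precision, recall])
```

Optimization techniques include: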

- Hyperparameter tuning: Adjusting learning rates, batch sizes, and epochs

- Regularization: Using methods like L1/L2 regularization or dropout to prevent overfitting

- Data augmentation: Artificially expanding the dataset to improve generalization

- Transfer learning: Fine-tuning pre-trained models for specific tasks
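Two of the regularization methods listed above can be sketched directly in NumPy, as an illustration under simplifying assumptions: the weight matrices, the data_loss value, the lam coefficient, and the helper names are all hypothetical. In Keras the corresponding tools are tf.keras.layers.Dropout (used in the model earlier) and tf.keras.regularizers.l2, passed to a layer through its kernel_regularizer argument.

```python
import numpy as np

def l2_penalty(weights, lam):
    # L2 regularization adds lam * (sum of squared weights) to the loss,
    # pushing training toward smaller weights.
    return lam * sum(np.sum(w ** 2) for w in weights)

def inverted_dropout(activations, rate, rng, training=True):
    # During training, zero each activation with probability `rate` and scale
    # the survivors by 1 / (1 - rate) so the expected activation is unchanged.
    # At inference time, dropout passes activations through untouched.
    if not training or rate == 0.0:
        return activations
    keep = rng.random(activations.shape) >= rate
    return activations * keep / (1.0 - rate)

rng = np.random.default_rng(0)

# Hypothetical weights and unregularized loss value.
W1 = np.array([[0.5, -1.0], [2.0, 0.0]])
W2 = np.array([[1.0], [-0.5]])
data_loss = 0.7
total_loss = data_loss + l2_penalty([W1, W2], lam=0.01)

h = np.ones(10)
h_train = inverted_dropout(h, rate=0.2, rng=rng)                  # entries are 0 or 1.25
h_infer = inverted_dropout(h, rate=0.2, rng=rng, training=False)  # unchanged
print(total_loss, h_train, h_infer)
```

Scaling the surviving activations during training (inverted dropout) means no rescaling is needed at inference time, which is why the layer can simply return its input when training is off.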

Continuous monitoring and updating of models is necessary to maintain performance as datasets or problem domains change.
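Monitoring usually means recomputing evaluation metrics on fresh labeled data. As a reminder of what precision and recall measure, here is a plain-Python sketch on hypothetical labels: precision is the share of predicted positives that are correct, and recall is the share of actual positives the model finds. The Keras Precision and Recall metrics used earlier report the same ratios after thresholding predicted probabilities (at 0.5 by default); precision_recall is a hypothetical helper name.

```python
def precision_recall(y_true, y_pred):
    # Count true positives, false positives, and false negatives
    # for the positive class (label 1).
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical true labels and thresholded predictions for a binary task.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 1, 0, 1, 0, 1, 0]

p, r = precision_recall(y_true, y_pred)
print(p, r)  # precision 3/5 = 0.6, recall 3/4 = 0.75
```

Tracking both numbers over time shows whether a model is drifting toward false alarms (falling precision) or missed cases (falling recall), which a single accuracy figure can hide.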

Neural networks adapt to a wide range of applications, and TensorFlow supplies the tools to build, evaluate, and refine them, from low-level tensors and operations to the high-level Keras API.
