Understanding Generative Adversarial Networks

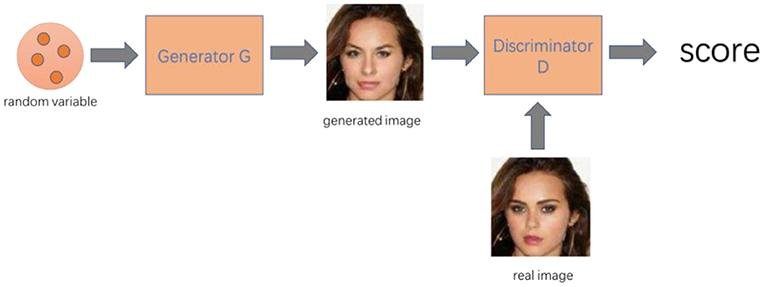

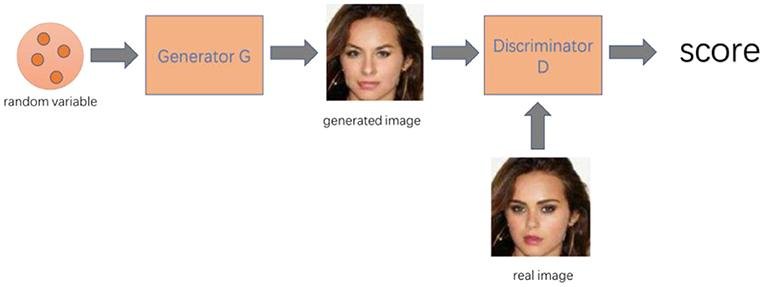

Generative Adversarial Networks (GANs) consist of two key components: the generator and the discriminator. The generator is a neural network that turns random noise into synthetic data intended to resemble real-world samples, and over the course of training its output becomes increasingly realistic. The discriminator, on the other hand, evaluates the data it receives and estimates whether each sample is real or generated.

During training, the generator and discriminator are locked in a contest. The generator tries to fool the discriminator with convincing fake data, while the discriminator works to sharpen its detection skills. When training goes well, this adversarial process drives both networks to improve until the generated data is hard to distinguish from real data.

Loss functions guide this training process. The generator’s loss rewards samples that the discriminator classifies as real, while the discriminator’s loss rewards correctly separating real samples from generated ones. Because each network’s loss depends on the other network’s output, every update to one changes the training signal for the other, and this feedback loop lets both refine their capabilities with each iteration.
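The original GAN paper (Goodfellow et al., 2014) writes this interplay as a single two-player minimax game. With $D(x)$ the discriminator’s estimated probability that $x$ is real, $G(z)$ the generator’s output for a noise vector $z \sim p_z$, and $p_{\text{data}}$ the real data distribution, the value function is:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator maximizes $V$ by pushing $D(x)$ toward 1 on real data and $D(G(z))$ toward 0 on generated data, while the generator minimizes $V$ by pushing $D(G(z))$ toward 1.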

GANs have found applications in various domains, such as:

- Image synthesis

- Data augmentation

- Image resolution enhancement

- Creative tasks like art generation and style transfer

The competitive interaction between the generator and discriminator pushes the boundaries of what is possible in artificial intelligence, leading to remarkable advancements in data generation and manipulation.

Key Components of GAN Architecture

The generator is the component of the GAN architecture responsible for creating synthetic data. It takes a random vector (often called the latent or noise vector, typically sampled from a Gaussian or uniform distribution) as input and passes it through a series of learned transformations to produce a data sample. Training teaches it to map the noise distribution onto the distribution of the real data, much like an artist refining a rough concept into a convincing masterpiece.
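As a rough illustration, the plain-Python sketch below (no deep learning framework, so it runs anywhere) shows the shape of this mapping: a noise vector goes through one hidden ReLU layer and a tanh output layer. The layer sizes, the Gaussian noise, the tanh output range, and every name in it (`Generator`, `dense`, `sample_noise`) are illustrative assumptions; the weights are random rather than trained, and real generators are usually much deeper networks built with a framework such as PyTorch or TensorFlow.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Random weight matrix (n_out x n_in) and zero bias vector for one dense layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


def dense(x, layer):
    """Affine transform: weights @ x + bias."""
    weights, bias = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class Generator:
    """Toy generator: latent noise z -> hidden ReLU layer -> tanh output in [-1, 1]."""

    def __init__(self, latent_dim, hidden_dim, data_dim, seed=0):
        rng = random.Random(seed)
        self.latent_dim = latent_dim
        self.hidden = make_layer(latent_dim, hidden_dim, rng)
        self.out = make_layer(hidden_dim, data_dim, rng)

    def __call__(self, z):
        h = [max(0.0, v) for v in dense(z, self.hidden)]   # ReLU nonlinearity
        return [math.tanh(v) for v in dense(h, self.out)]  # squash to [-1, 1]


def sample_noise(latent_dim, rng):
    """Draw one latent vector from a standard Gaussian."""
    return [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]


rng = random.Random(42)
gen = Generator(latent_dim=8, hidden_dim=16, data_dim=4)
fake = gen(sample_noise(8, rng))
print(fake)  # one synthetic 4-dimensional sample
```

Training would adjust the weights in `hidden` and `out` so that these outputs become indistinguishable from real samples.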

The discriminator works alongside the generator, acting as a thorough examiner. It receives data from both the real dataset and the generator, and its task is to assign a probability indicating the authenticity of each sample. The discriminator’s ability to distinguish between genuine and counterfeit data is crucial for the training process.
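A minimal sketch of the discriminator’s interface, assuming the simplest possible model: a linear score (logit) passed through a sigmoid so the output is a probability between 0 and 1. The hand-picked weights and the `Discriminator` class are hypothetical; a practical discriminator is a deep network whose weights are learned.

```python
import math


def sigmoid(s):
    """Numerically stable logistic function mapping a logit to [0, 1]."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


class Discriminator:
    """Toy discriminator: linear logit followed by a sigmoid.

    Returns the estimated probability that a sample is real.
    """

    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

    def logit(self, x):
        return sum(w * xi for w, xi in zip(self.weights, x)) + self.bias

    def __call__(self, x):
        return sigmoid(self.logit(x))


disc = Discriminator(weights=[2.0, -1.0], bias=0.0)
print(disc([1.0, 0.5]))  # logit 1.5 -> probability about 0.82 ("probably real")
print(disc([0.0, 0.0]))  # logit 0.0 -> probability 0.5 (undecided)
```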

The adversarial training loop is the backbone of the GAN framework. In each iteration, the generator produces a batch of fake samples, which is fed into the discriminator along with a batch of real data. The discriminator is updated to classify the two batches correctly, and its output on generated samples then supplies the gradients used to update the generator. As the generator becomes better at fooling the discriminator, the discriminator must improve at detecting fakes, and this cycle pushes both networks to keep improving.
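The alternating loop can be sketched end to end on a deliberately tiny problem. In this hedged example (all names and settings are assumptions, not a reference implementation), real data is one-dimensional and Gaussian, the generator is the affine map `a*z + b`, and the discriminator is `sigmoid(w*x + c)`. With models this small the gradients can be written out by hand, so the sketch needs only Python’s standard library. The generator uses the non-saturating loss `-log D(G(z))`, a common practical choice.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def mean(values):
    return sum(values) / len(values)


def train_toy_gan(steps, batch=64, lr=0.05, real_mean=4.0, seed=0):
    """Alternating GAN updates on 1-D data, with gradients derived by hand.

    Generator:     G(z) = a*z + b,          z ~ N(0, 1)
    Discriminator: D(x) = sigmoid(w*x + c)  (probability that x is real)
    Real data:     x ~ N(real_mean, 1)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        # 1) Discriminator step on one real batch and one generated batch.
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        # Gradients of L_D = -mean(log D(real)) - mean(log(1 - D(fake))).
        grad_w = (mean([(d - 1.0) * x for d, x in zip(d_real, real)])
                  + mean([d * x for d, x in zip(d_fake, fake)]))
        grad_c = mean([d - 1.0 for d in d_real]) + mean(d_fake)
        w -= lr * grad_w
        c -= lr * grad_c

        # 2) Generator step against the freshly updated discriminator.
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        d_gen = [sigmoid(w * (a * zi + b) + c) for zi in z]
        # Gradients of the non-saturating loss L_G = -mean(log D(G(z))).
        grad_a = mean([(d - 1.0) * w * zi for d, zi in zip(d_gen, z)])
        grad_b = mean([(d - 1.0) * w for d in d_gen])
        a -= lr * grad_a
        b -= lr * grad_b
    return {"a": a, "b": b, "w": w, "c": c}


after_one = train_toy_gan(steps=1)
print(after_one)  # w > 0 and b > 0 after the first iteration
# Longer run: compare b with real_mean (plain alternating steps may oscillate).
print(train_toy_gan(steps=500))
```

After a single iteration the discriminator weight `w` turns positive (larger values look more real, since the real mean is 4) and the generator offset `b` moves up toward the real data. Over longer runs `b` is pulled toward `real_mean`, but plain alternating gradient steps like these can circle around the target instead of settling on it.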

Loss functions play a vital role in guiding the training of GANs. The generator’s loss is low when the discriminator classifies its samples as real; in the original formulation, with an optimal discriminator, minimizing it amounts to minimizing the Jensen-Shannon divergence between the real and generated data distributions. The discriminator’s loss, on the other hand, drives it to accurately classify real and fake data. By optimizing these functions in alternation, the GAN iteratively refines the performance of both networks.
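Written out, the standard binary cross-entropy losses look like this. The sketch works on discriminator logits (pre-sigmoid scores) rather than probabilities, in the same spirit as framework losses such as PyTorch’s `BCEWithLogitsLoss`, because that avoids taking the log of 0. The function names are hypothetical, and the generator uses the non-saturating form `-log D(G(z))` rather than the original `log(1 - D(G(z)))`.

```python
import math


def softplus(t):
    """log(1 + exp(t)), computed without overflow."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


def discriminator_loss(real_logits, fake_logits):
    """Binary cross-entropy with real samples labeled 1 and generated samples labeled 0.

    Uses -log D(x) = softplus(-s) and -log(1 - D(x)) = softplus(s), where s is the logit.
    """
    real_term = sum(softplus(-s) for s in real_logits) / len(real_logits)
    fake_term = sum(softplus(s) for s in fake_logits) / len(fake_logits)
    return real_term + fake_term


def generator_loss(fake_logits):
    """Non-saturating generator loss: mean of -log D(G(z))."""
    return sum(softplus(-s) for s in fake_logits) / len(fake_logits)


# An undecided discriminator (all logits 0, so D = 0.5) gives L_D = 2 log 2 and L_G = log 2.
print(discriminator_loss([0.0], [0.0]))  # about 1.386
print(generator_loss([0.0]))             # about 0.693
```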

High-quality training data is essential for the success of GANs. A diverse and representative dataset serves as the foundation for both the generator and the discriminator to learn from. The real data acts as the benchmark against which the generator’s outputs are evaluated, and the quality and variety of the training data directly impact the robustness and generalization ability of the resulting GAN model.

The optimization algorithm is the driving force behind the training process, adjusting the neural networks’ weights to minimize the loss functions. Stochastic gradient descent (SGD) and its variants, such as Adam, are commonly used. These algorithms iteratively update the parameters, with each step intended to reduce the corresponding network’s loss on the current batch; in a two-player game, however, a step that helps one network can undo progress made by the other. Effective optimization is therefore crucial for both the speed of convergence and the stability of the GAN.
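For concreteness, this is the Adam update rule applied to a single scalar parameter, in plain Python. It is a sketch for illustration only: in a GAN, the generator and the discriminator each get their own optimizer with separate moment estimates, and the learning rate used here (0.1) suits this toy quadratic, not a GAN. For reference, DCGAN used Adam with a learning rate of 0.0002 and β1 = 0.5. The function name `adam_minimize` is hypothetical.

```python
import math


def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a 1-D function with the Adam update rule, given its gradient."""
    x = x0
    m = 0.0  # exponential moving average of gradients (first moment)
    v = 0.0  # exponential moving average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# f(x) = (x - 3)^2 has gradient 2(x - 3) and its minimum at x = 3.
print(adam_minimize(lambda x: 2 * (x - 3), x0=0.0))
```

Dividing by the root of the second-moment estimate makes the step size roughly independent of the gradient’s scale, which is one reason Adam is a popular default for GAN training.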

Each component of the GAN architecture – the generator, discriminator, adversarial training loop, loss functions, training data, and optimization algorithm – plays a vital role in creating a system where the interplay between creation and critique leads to the generation of remarkably realistic data. This synergy is what makes GANs a powerful and transformative technology in the field of artificial intelligence.

Applications of GANs

GANs have found diverse applications across various domains, showcasing their versatility and potential for impact.

Data Augmentation

One key application is data augmentation, where GANs contribute to expanding the volume and diversity of training datasets. In the medical field, for example, generating synthetic medical images can supplement limited available data, enabling better-trained models for disease diagnosis. This is particularly valuable in scenarios involving rare diseases, where obtaining sufficient data is challenging.1
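In practice, augmentation often means topping up an under-represented class with generated samples. The sketch below assumes a list of `(features, label, is_synthetic)` tuples and any callable that returns one synthetic sample; the random `fake_generator` is a purely hypothetical stand-in that would be replaced by sampling from a trained (typically class-conditional) GAN. Keeping an `is_synthetic` flag makes it easy to evaluate the downstream model on real data only.

```python
import random


def augment_with_synthetic(dataset, label, target_count, generate):
    """Add generated samples for `label` until that class has target_count examples.

    dataset  -- list of (features, label, is_synthetic) tuples
    generate -- any callable returning one synthetic feature vector
    """
    current = sum(1 for _, y, _ in dataset if y == label)
    missing = max(0, target_count - current)
    synthetic = [(generate(), label, True) for _ in range(missing)]
    return dataset + synthetic


rng = random.Random(0)


def fake_generator():
    """Hypothetical stand-in for sampling a trained GAN: random 2-D points."""
    return [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]


# 8 "common" examples and only 2 "rare" ones.
data = [([0.1, 0.2], "common", False)] * 8 + [([1.5, 1.4], "rare", False)] * 2
balanced = augment_with_synthetic(data, "rare", target_count=8, generate=fake_generator)
print(sum(1 for _, y, _ in balanced if y == "rare"))  # 8
print(sum(1 for *_, synth in balanced if synth))      # 6
```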

Super-Resolution Imaging

In the realm of super-resolution imaging, GANs play a significant role in transforming low-resolution images into high-resolution counterparts. This application is evident in enhancing satellite imagery for detailed earth observation, such as mapping urban development or monitoring environmental changes. Super-resolution GANs fill in the fine details, providing clearer and more actionable images that are valuable in fields like environmental science and urban planning.2

Anomaly Detection

Anomaly detection is another area where GANs show promise. By learning the distribution of normal data, GANs can identify deviations that indicate anomalies. This capability is particularly useful in cybersecurity, where GANs help detect fraudulent activities and intrusions. For instance, in credit card fraud detection, a GAN trained on normal transaction data can spot irregularities, providing an additional layer of security and helping mitigate risks in real time.3
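One simple way to turn a GAN into an anomaly detector, sketched here under strong assumptions, is to score each sample by how unlikely the discriminator judges it to be (`1 - D(x)`) and to set a threshold from scores on known-normal data. Published methods such as AnoGAN use a richer score that combines a reconstruction residual with a discriminator-based term. The `toy_discriminator` below is a hypothetical stand-in (a bump centered on typical transaction amounts), not a trained network.

```python
import math


def anomaly_scores(samples, discriminator):
    """Score = 1 - D(x): a low 'realness' estimate means a more anomalous sample."""
    return [1.0 - discriminator(x) for x in samples]


def fit_threshold(normal_scores, quantile=0.99):
    """Pick a threshold so that roughly `quantile` of known-normal scores fall at or below it."""
    ordered = sorted(normal_scores)
    index = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[index]


def flag_anomalies(samples, discriminator, threshold):
    return [s > threshold for s in anomaly_scores(samples, discriminator)]


def toy_discriminator(amount):
    """Hypothetical stand-in: amounts near 50 look 'real', far-off amounts do not."""
    return math.exp(-((amount - 50.0) / 20.0) ** 2)


normal_amounts = [30.0, 45.0, 50.0, 55.0, 70.0]
threshold = fit_threshold(anomaly_scores(normal_amounts, toy_discriminator))
print(flag_anomalies([52.0, 400.0], toy_discriminator, threshold))  # [False, True]
```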

Domain Adaptation

GANs also demonstrate their strength in domain adaptation, facilitating the transfer of knowledge from one domain to another. This is crucial in tasks like autonomous driving, where models trained in simulated environments can be adapted to real-world conditions. GANs can bridge the gap between synthetic and real-world data, enabling models to perform effectively in varied and complex real-world scenarios.4

Data Privacy and Generation

The application of GANs to data privacy is especially promising. By generating synthetic data that retains the statistical properties of real data, GANs can reduce the need to share the original records. This is valuable in healthcare, where patient data privacy is paramount: GANs can generate realistic but synthetic patient records for training machine learning models, enabling researchers to develop healthcare models without direct access to sensitive personal information.5 Synthetic data alone does not guarantee confidentiality, however, because a generator can memorize training records; formal guarantees require additional measures such as differentially private training.

Real-World Examples

Real-world examples highlight the transformative impact of GANs:

- Researchers at MIT have leveraged GANs to create photorealistic 3D models for applications in architecture and video games.

- In retail, brands like H&M use GANs to design new fashion merchandise, blending innovation with consumer preferences.

- Nvidia’s StyleGAN continues to impress with its ability to generate lifelike human faces, pushing the boundaries of digital art and creative industries.

The versatility of GANs extends beyond these applications, showcasing their potential to revolutionize various sectors by enhancing data quality, improving model training, and supporting data privacy. The dynamic interplay between creation and critique within GANs propels advancements in artificial intelligence, positioning them as a cornerstone technology poised to drive future innovation.

Challenges and Limitations of GANs

Despite their impressive capabilities, working with GANs presents a set of challenges and limitations.

Training Instability

One notable issue is training instability. GAN training involves a delicate balance between the generator and the discriminator, and if one network outpaces the other, training can oscillate or stall instead of converging. For instance, if the discriminator becomes too good too quickly, the generator may receive vanishingly small gradients that do little to help it produce more realistic data. Conversely, if the generator outpaces the discriminator, the discriminator may fail to provide accurate feedback, leaving the generator without a useful training signal.6
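The vanishing-gradient effect can be checked numerically. If `s` is the discriminator’s logit on a generated sample, the original generator objective `log(1 - D(G(z)))` has derivative `-sigmoid(s)` with respect to `s`, while the non-saturating alternative `-log D(G(z))`, also suggested in the original GAN paper, has derivative `-(1 - sigmoid(s))`. The sketch below simply evaluates both; the function names are illustrative.

```python
import math


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def saturating_grad(s):
    """d/ds of log(1 - sigmoid(s)), the generator term of the original minimax loss."""
    return -sigmoid(s)


def non_saturating_grad(s):
    """d/ds of -log(sigmoid(s)), the non-saturating generator loss."""
    return -(1.0 - sigmoid(s))


# s is the discriminator's logit on a generated sample; a very negative s means
# the discriminator is confident the sample is fake (D(G(z)) close to 0).
for s in [0.0, -5.0, -10.0]:
    print(f"D={sigmoid(s):.5f}  saturating={saturating_grad(s):.5f}  "
          f"non-saturating={non_saturating_grad(s):.5f}")
```

As the discriminator rejects a sample more confidently, the saturating gradient shrinks toward zero while the non-saturating gradient stays close to -1, which is why the non-saturating loss is widely used in practice.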

Mode Dropping

Another challenge is mode dropping (closely related to mode collapse), where the generator fails to capture the full range of variability in the data, resulting in repetitive outputs or missing patterns. For example, if a GAN is trained to generate human faces, mode dropping might lead it to produce faces of only certain ethnicities or ages, losing the diversity of the real data distribution. This happens when the generator finds it easier to concentrate on a subset of the data distribution that it can reproduce well, neglecting the other modes.7
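Mode dropping can be measured on synthetic data whose modes are known. This hedged sketch uses eight 2-D clusters arranged on a circle (a common toy setup in the GAN literature) and reports the fraction of clusters that receive at least one generated sample within a chosen radius. The radius, the cluster layout, and the two stand-in "generators" are illustrative assumptions; real image datasets have no labeled modes, so this check applies only to toy problems.

```python
import math
import random


def mode_coverage(samples, centers, radius):
    """Fraction of known modes that receive at least one sample within `radius`.

    Each sample is assigned to its nearest center before the distance check.
    """
    hit = set()
    for x in samples:
        nearest = min(range(len(centers)), key=lambda i: math.dist(x, centers[i]))
        if math.dist(x, centers[nearest]) <= radius:
            hit.add(nearest)
    return len(hit) / len(centers)


# Eight modes evenly spaced on the unit circle.
centers = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8)) for k in range(8)]
rng = random.Random(0)
# Stand-in for a collapsed generator: every sample lands near the first mode.
collapsed = [(1.0 + rng.gauss(0, 0.02), rng.gauss(0, 0.02)) for _ in range(500)]
# Stand-in for a healthy generator: samples spread over all modes.
diverse = [(cx + rng.gauss(0, 0.02), cy + rng.gauss(0, 0.02))
           for cx, cy in centers for _ in range(50)]

print(mode_coverage(collapsed, centers, radius=0.1))  # 0.125 (1 of 8 modes)
print(mode_coverage(diverse, centers, radius=0.1))    # 1.0 (all 8 modes)
```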

Hyperparameter Sensitivity

GANs are also highly sensitive to hyperparameters. Settings such as learning rates, batch sizes, and network architectures critically influence the performance and stability of GANs, and small changes can lead to vastly different outcomes. Inappropriate learning rates can cause the networks to oscillate or converge prematurely, while batches that are too small may not represent the data distribution well, leading to poor model performance. This sensitivity necessitates extensive experimentation and fine-tuning, often requiring expert knowledge and significant computational resources.8

To mitigate these challenges, several strategies can be employed:

- Advanced initialization and regularization techniques, such as spectral normalization and batch normalization, can help stabilize GAN training.

- Alternative loss formulations, such as Wasserstein GAN (WGAN) and Least Squares GAN (LSGAN), replace the original loss functions to provide smoother gradients and better convergence properties.

- The Two-Time-Scale Update Rule (TTUR) uses separate learning rates for the generator and the discriminator to maintain balance between the two networks.

- Dynamic training schedules, such as progressively growing GANs, where network layers are added incrementally, can reduce training instability by allowing the networks to gradually learn more complex representations.

- Monitoring tools to track the distributions of outputs and adaptive techniques, such as learning rate annealing or early stopping, can help in dynamically responding to signs of instability or mode collapse during training.
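Spectral normalization, the first technique in the list, divides each weight matrix by its largest singular value (its spectral norm), which bounds how sharply a linear layer can change its output. The plain-Python sketch below estimates that value by power iteration. It iterates to convergence for clarity, whereas the published method performs a single iteration per training step and reuses the vector between steps; the function names and the test matrix are illustrative.

```python
import math
import random


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def transpose(W):
    return [list(col) for col in zip(*W)]


def spectral_norm(W, iterations=50, seed=0):
    """Estimate the largest singular value of W by power iteration."""
    rng = random.Random(seed)
    Wt = transpose(W)
    v = [rng.gauss(0.0, 1.0) for _ in range(len(W[0]))]
    for _ in range(iterations):
        u = matvec(W, v)                    # u <- W v, normalized
        u_norm = math.sqrt(sum(x * x for x in u))
        u = [x / u_norm for x in u]
        v = matvec(Wt, u)                   # v <- W^T u, normalized
        v_norm = math.sqrt(sum(x * x for x in v))
        v = [x / v_norm for x in v]
    # With v close to the top right-singular vector, ||W v|| approximates sigma_max.
    Wv = matvec(W, v)
    return math.sqrt(sum(x * x for x in Wv))


def spectrally_normalize(W, **kwargs):
    """Divide W by its estimated spectral norm, making the layer roughly 1-Lipschitz."""
    sigma = spectral_norm(W, **kwargs)
    return [[w / sigma for w in row] for row in W]


W = [[3.0, 0.0], [0.0, 1.0]]
print(spectral_norm(W))                        # close to 3.0
print(spectral_norm(spectrally_normalize(W)))  # close to 1.0
```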

By understanding and addressing these challenges, researchers and practitioners can enhance the efficacy and reliability of GANs, making them more robust for a broader range of applications. While these strategies do not completely eliminate the challenges, they significantly reduce their impact, enabling more stable and effective GAN training.

Future of GANs in AI

Advancements in GAN Technology

Future advancements in GAN technology are likely to address the current challenges of training instability and mode dropping. Researchers are continually exploring enhancements in network architectures and loss functions to create more stable and effective models. One emerging development is the introduction of self-attention mechanisms and transformers within GAN architectures. Self-attention allows the model to focus on different parts of the input data more effectively, improving the generation quality and capturing more intricate details. This is particularly beneficial for applications requiring high fidelity, such as fine art creation and intricate medical imaging.
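The core of such a self-attention layer is scaled dot-product attention: every position computes a weighted average over all positions, with weights derived from query-key similarity. The plain-Python sketch below is a minimal version operating on a list of feature vectors; the projection matrices `Wq`, `Wk`, `Wv` are hypothetical parameters that would normally be learned. SAGAN (Self-Attention GAN) applies the same idea to convolutional feature maps and adds the result back to its input, scaled by a learned factor.

```python
import math


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features, Wq, Wk, Wv):
    """Scaled dot-product self-attention over a list of feature vectors.

    Each output position is a weighted average of the value vectors from all
    positions, with weights given by softmax(query . key / sqrt(d)).
    """
    q = [matvec(Wq, f) for f in features]
    k = [matvec(Wk, f) for f in features]
    v = [matvec(Wv, f) for f in features]
    scale = 1.0 / math.sqrt(len(k[0]))
    outputs = []
    for qi in q:
        weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        outputs.append([sum(w * vj[d] for w, vj in zip(weights, v))
                        for d in range(len(v[0]))])
    return outputs


identity = [[1.0, 0.0], [0.0, 1.0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = self_attention(features, identity, identity, identity)
print(out)  # each row mixes information from all three positions
```

Because every output row draws on every position, distant parts of an image can influence each other in a single layer, which is the property the paragraph above attributes to self-attention.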

Additionally, hybrid models that combine GANs with other machine learning techniques, such as Reinforcement Learning (RL) and Variational Autoencoders (VAEs), are emerging. These hybrid approaches aim to leverage the strengths of different models to overcome the limitations of each. For instance, integrating RL with GANs can give the generator more structured reward signals, which may improve the quality and diversity of the generated data. Meanwhile, combining GANs with VAEs can yield better-structured latent spaces, enhancing the model’s ability to generate complex and realistic samples.

Emerging Applications

The horizon for GAN applications is expanding rapidly. One area under active exploration is natural language processing (NLP), where GANs have been applied to generating coherent and contextually relevant text for tasks such as machine translation, text summarization, and creative writing. Text is harder for GANs than images because words are discrete tokens, so the discriminator’s gradients cannot flow directly back into the generator; approaches such as SeqGAN work around this with reinforcement learning. If these obstacles are overcome, GANs that generate high-quality, human-like language could open new avenues in chatbot development and automated content creation, offering personalized and dynamic interactions.

In the field of healthcare, GANs’ applications go beyond medical image synthesis. They are now being explored for drug discovery and development, as a means to generate novel molecular structures with desired properties. This capability could accelerate the identification of potential therapeutic candidates, with the aim of reducing the time and cost involved in drug development.

Another burgeoning application is in augmented reality (AR) and virtual reality (VR). GANs enable the creation of highly realistic virtual environments, improving the immersive experience for users. This advances the fields of gaming, training simulations, and virtual tourism, where the quality of virtual assets can vastly enhance user engagement and the overall experience.

In the automotive industry, GANs are increasingly used for autonomous vehicle training. By generating diverse driving scenarios, GANs support the learning process for self-driving algorithms, helping them handle a wide range of real-world conditions. This approach supplements the data obtained from real-world driving tests, leading to more reliable autonomous systems.1

Impact on Various Industries

The potential impact of GANs on various industries is significant. In media and entertainment, GANs are revolutionizing content creation, enabling the production of lifelike graphics, special effects, and even virtual actors. This has cost-saving implications and allows for creative possibilities previously deemed impossible.

In the financial sector, GANs are enhancing fraud detection by generating synthetic fraud scenarios, helping financial institutions train their detection systems more effectively. This proactive approach to spotting anomalies helps keep those systems robust against evolving fraudulent tactics.

The fashion industry benefits from GANs through automated design and trend prediction. By analyzing fashion trends and generating new designs, GANs help designers stay ahead of the curve, blending creativity with data-driven insights.

Research and Development Efforts

Ongoing research and development efforts focus on improving GAN performance and broadening their applicability. Leading institutions and technology companies are investing in refining GAN algorithms and expanding their use cases. Collaborative research projects are exploring interdisciplinary applications, where GANs intersect with other fields such as biology, astronomy, and materials science.

Researchers are also investigating ways to make GAN technology more accessible to non-experts. User-friendly tools and platforms are being developed to enable artists, writers, and other creatives to harness the power of GANs without requiring deep technical expertise. This democratization is expected to spur innovation across various domains, bringing fresh perspectives and novel applications.2