When it comes to groundbreaking innovations in artificial intelligence and machine learning, OpenAI’s Dall-E is an impressive exemplar. This machine learning model demonstrates the transformative potential of AI through its ability to generate images purely from textual descriptions. Eclipsing traditional boundaries, Dall-E combines technology, creativity, and large-scale, diverse datasets. But what is the science behind this incredible capability? This guide walks the general public through how Dall-E operates, the datasets it learns from, and its potential applications, implications, limitations, and anticipated future developments.

Introduction to Dall-E

Let’s start with Dall-E, an evolutionary step in artificial intelligence and a model that opens a new paradigm in AI-driven creativity. AI plays a growing role in our lives, and technical innovations like this one deserve a closer look.

Dall-E is an AI model developed by OpenAI and derived from GPT-3. Its name nods to the Surrealist artist Salvador Dalí and the Pixar character WALL-E. The model is trained to generate images from textual descriptions, turning abstract concepts into pictures, which is a significant breakthrough in the field of AI.

Think of Dall-E as GPT-3’s artistic cousin, manipulating a latent space of images rather than words, speaking the language of imagery, and giving form to creative expression. It moves beyond mere object identification, image processing, and generation of photorealistic imagery. Indeed, unveiling Dall-E’s capabilities is essential to understanding how it is revolutionizing the AI landscape.

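Dall-E is trained on a dataset of text-image pairs. Images are first compressed into discrete tokens by a discrete variational autoencoder, an approach closely related to the vector-quantized VAE family (VQ-VAE and VQ-VAE-2), and a transformer then learns to predict those image tokens from the text. This enables Dall-E to generate images from descriptions entered by users. It makes real progress on a significant challenge in AI, that of abstraction and association, producing results that go well beyond a literal translation of the prompt.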
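To make the idea of "images as tokens" concrete, here is a small arithmetic sketch. The figures are the ones reported for the original Dall-E (a 256×256 image compressed to a 32×32 grid of codes from a vocabulary of 8192, preceded by up to 256 text tokens). They do not come from this guide, so treat them as assumptions, and note that the constant names are purely illustrative.

```python
# Figures as reported for the original Dall-E; assumptions for illustration.
IMAGE_SIDE = 256        # input image is IMAGE_SIDE x IMAGE_SIDE pixels
GRID_SIDE = 32          # the autoencoder emits a GRID_SIDE x GRID_SIDE grid of codes
CODEBOOK_SIZE = 8192    # number of distinct values each image token can take
MAX_TEXT_TOKENS = 256   # upper bound on text tokens in the prompt

# Each image becomes a fixed number of discrete tokens.
image_tokens = GRID_SIDE * GRID_SIDE

# The transformer sees text tokens followed by image tokens as one sequence.
sequence_length = MAX_TEXT_TOKENS + image_tokens

# Every token summarizes a square patch of pixels.
pixels_per_token = (IMAGE_SIDE // GRID_SIDE) ** 2

print(image_tokens, sequence_length, pixels_per_token)
```

Seen this way, generating a picture is the same kind of task as generating a sentence: predicting the next token in a sequence, except that most of the tokens stand for image patches.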

Whether rendering imaginative illustrations such as an “armchair in the shape of an avocado” or blending notions like “a radish in a tutu walking a dog”, Dall-E can materialize the idea, which signals a nuanced grasp of language and a substantial leap in AI capability.

The implications of this tool for creative and practical applications are vast. In content creation, design, and advertising, Dall-E could provide a cost-effective and time-efficient way to generate bespoke, original content or to hone the perfect marketing image. Beyond the commercial, such tools could enable new forms of artistic expression and help people who lack drawing skills communicate visually.

However, as an academic community, we need to balance enthusiasm with caution. The evolution of AI technologies like Dall-E raises concerns about the ownership of creative work, the ethics of AI use, and potential misuse. Regulations will need to balance fostering creativity and technical advancement against ensuring that ethical practices around AI-generated content are followed.

In conclusion, Dall-E underscores the merging of disciplines, bringing together AI, language comprehension, computer vision, and human creativity into one fascinating nexus. As we step into an era where AI not only learns from us but also creatively collaborates with us, it is understandable that trepidation accompanies the excitement. Even so, watching AI’s potential unfold is an enthralling chapter in the grand narrative of human discovery. This is simply the dawn of AI democratizing creativity; the full day holds promise beyond the wildest figments of human imagination.

Working Mechanism of Dall-E

Looking further into its architecture, Dall-E is a descendant of GPT-3, an established large language model. Just as GPT-3 generates coherent and meaningful sentences, Dall-E produces coherent and pertinent images from linguistic prompts. It does not merely echo its predecessor’s capabilities in a different domain; it extends generative AI into new spheres.

Significantly, Dall-E uses a 12-billion-parameter version of GPT-3. This scale helps it handle non-literal, metaphorical descriptions and output unconventional yet coherent imagery that merges disparate elements. The model’s imaginative prowess is not an accident but the result of training on a diverse array of images and captions from the internet.

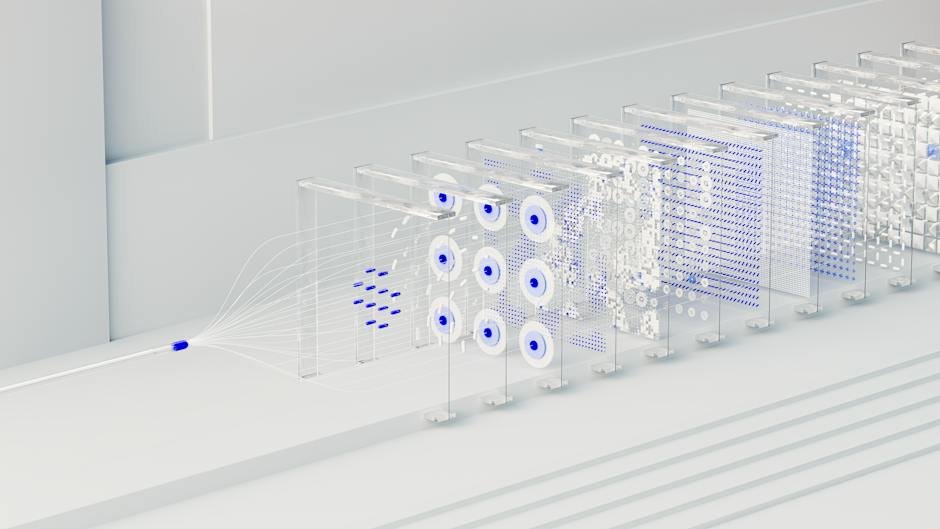

While dissecting the mechanism beneath Dall-E’s sophistication, one cannot overlook the role of the vector-quantized autoencoder (the VQ-VAE family; Dall-E’s own variant is a discrete VAE). Image generation by Dall-E is not a direct translation from text to image. Instead, it involves encoding and decoding. During training, the autoencoder compresses each image into a grid of discrete codes drawn from a learned codebook. At generation time, the transformer predicts such codes from the text prompt, and the decoder reconstructs an image from them. VQ-VAE-2 extends the basic VQ-VAE by encoding images at multiple scales, which helps preserve both global structure and fine detail when the image is reconstructed.

Pivotal to Dall-E’s success is its use of the transformer architecture, whose attention mechanism weighs how strongly each input token relates to every other. The model produces its images by relating position-dependent features of the text, interpreting the correlations among them, and combining the corresponding visual elements.
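The core step of vector quantization can be sketched in a few lines. This is a toy illustration under stated assumptions, not Dall-E’s actual code: the two-dimensional codebook, the "encoder outputs", and all function names are made up, and a real system learns both the encoder and a much larger codebook.

```python
import math

def nearest_code(vector, codebook):
    """Return the index of the codebook entry closest to `vector` (Euclidean)."""
    return min(range(len(codebook)), key=lambda i: math.dist(vector, codebook[i]))

def quantize(latents, codebook):
    """Map each continuous latent vector to a discrete code index."""
    return [nearest_code(v, codebook) for v in latents]

def dequantize(codes, codebook):
    """Look the code indices back up to recover the quantized vectors."""
    return [codebook[i] for i in codes]

# Hypothetical codebook with four 2-D entries (values chosen for illustration).
CODEBOOK = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]

# Pretend encoder outputs for a 2x2 grid of image patches, flattened to a list.
latents = [(0.1, -0.2), (0.9, 0.2), (0.2, 0.8), (0.7, 0.9)]

codes = quantize(latents, CODEBOOK)            # compressed, discrete representation
reconstructed = dequantize(codes, CODEBOOK)    # what the decoder would start from

print(codes)
print(reconstructed)
```

The list of integer codes is the "compressed representation": it is far smaller than the original pixels, and because it is discrete, a language-model-style transformer can learn to predict it one code at a time.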
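The weighting of associations described above is what scaled dot-product attention does. The following is a minimal sketch in plain Python, assuming toy two-dimensional vectors. It leaves out the learned projections, multiple heads, and masking that a production transformer uses, and the function and variable names are illustrative only.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# One query that points in the same direction as the first key.
queries = [[1.0, 0.0]]
keys = [[2.0, 0.0], [0.0, 2.0], [-2.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [-10.0, 0.0]]

outputs, weights = attention(queries, keys, values)
print(weights[0])
print(outputs[0])
```

The query attends most strongly to the key it resembles and least to the one pointing the opposite way, so the output is dominated by the first value vector. In a text-to-image model the same mechanism lets each image token draw on the most relevant words of the prompt.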

Dall-E, much like its sibling GPT-3, remains relatively cryptic regarding its internal processing of the textual descriptions. In theory, it pulls from a multitude of potential images, sifting through possible representations till it conjures the most coherent one. However, the ‘how’ of this operation remains obscure, inviting fascinating and potentially groundbreaking challenges to explore.

On the ethical side, Dall-E prompts debates about intellectual property rights and authentic creation, and it also raises concerns about potential misuse. Stringent regulations and guided applications are therefore necessary to prevent image generation for nefarious purposes.

Essentially, the Dall-E system is a harbinger of the next era of AI. Its success in producing ingenious, unpredictable images from textual descriptions sets a foundation for future developments, unifying the domains of AI, language comprehension, computer vision, and even human creativity. By integrating these fields, it elevates the discourse on the limits of AI and paves the way to uncharted territories.

To conclude, the operative mechanism of Dall-E may not be wholly transparent, but the marvel it inspires is palpable. Dall-E not only signifies a massive leap in AI capabilities but also invites humans to reconsider, and perhaps redefine, creativity. In an era where AI mirrors, extends, and even challenges human imagination, Dall-E carries the flag of visionary possibilities for AI and beyond.

Datasets Utilized in Training Dall-E

To understand the data used for Dall-E’s training, it helps to place it in the larger scheme of artificial intelligence. Dall-E, a variant of GPT-3, was engineered using a two-step process that encompasses both unsupervised and supervised learning.

The initial unsupervised learning phase feeds Dall-E a vast, diverse array of data without designated labels. It consumes image-text pairs gathered from the internet: an eclectic and expansive mix of visual forms and linguistic constructs that lays the foundation for Dall-E’s ability to translate textual prompts into imagery. These pairings are not strictly monitored; instead, they span a broad spectrum of online material, offering Dall-E a buffet of information from which to learn.

It is noteworthy, however, that some specifics of the dataset remain opaque. The exact steps OpenAI took to compile and curate data at this scale have not been fully disclosed. Furthermore, while explicit details about the sample size, data sources, and nature of these image-text pairs are scant, it is reasonable to infer that data diversity is key to the range of out-of-the-box imagery Dall-E can generate.

The unsupervised learning stage is followed by a round of supervised fine-tuning. At this juncture, Dall-E receives human guidance to sharpen its capacity for generating images that are coherent, relevant, and appropriate to the corresponding text. The specifics of this human input, however, are proprietary information held closely by OpenAI and have not been widely disclosed.

In conclusion, Dall-E imbibes an enormous and varied diet of image-text pairs to hone its skills. It thrives on the undefined boundaries of the internet’s visual and linguistic corpus in the initial phase, only to be refined and redirected by human-guided inputs later. While the precise specifics of this dataset do not find explicit mention, it is amply clear that the potency of Dall-E rests in the sturdy foundation laid by this meticulously crafted two-phase learning process. The rich informational ecosystem that Dall-E is exposed to forms the bedrock of its astounding creative ability to translate the human language into visual constructs with unerring ease and imaginative flair.

Potential Applications and Implications of Dall-E

Recall that Dall-E’s training process strikes an intricate balance between unsupervised and supervised learning. The model’s journey commences with an unsupervised exploration of vast image-text corpora from the internet. In this phase, it feeds on innumerable pairs of text and corresponding images, assimilating the visual grammar of the digital milieu. Unfortunately, owing to corporate secrecy, the specifics of data compilation and curation remain elusive, though the importance of data diversity in kindling Dall-E’s creativity cannot be overstated.

Once the initial unsupervised learning stage ended, Dall-E entered the crucible of supervised fine-tuning. Human-specified feedback, the nature of which remains proprietary to OpenAI, honed and directed the model’s abilities. The impact of this two-phase learning process is significant; it serves as the bedrock for Dall-E’s ability to translate textual prompts into intricate visual constructs.

Predictably, the arrival of Dall-E prompts pivotal questions about the trajectory of human-machine interactions, especially in creative fields. The potential applications go far beyond the realms of design and art, permeating areas such as VR/AR (Virtual Reality/Augmented Reality). For instance, the utility in a VR platform could take interactive world-building to a new level. A user could describe a scene, character, or object, and Dall-E could bring it to life in a virtual environment.

The implications of Dall-E’s revolutionary image generation capabilities in the arena of art and creativity are profound. Galleries filled with AI-produced artworks could become a commonplace reality, stimulating deeper discourse on the boundaries and definitions of art and aesthetic appreciation.

In the realm of cybernetic prosthetics, Dall-E could potentially augment perception by generating visual outputs in response to spoken or thought-based commands. Imagine a visually impaired person using a Dall-E-powered prosthetic eye to ‘see’ a visualisation of a spoken description of their surroundings.

Yet with every advancement comes a duty to scrutinize the technology’s potential for misuse, especially where AI is concerned. Unbridled use of image-generating AI could lead to the proliferation of deepfakes, further blurring the line between reality and digital fabrication. This underscores the urgent need for regulatory frameworks to mitigate misuse.

Ultimately, the Dall-E model represents a pioneering amalgamation of Natural Language Processing and Machine Vision in AI, carving a unique niche and shaping the discourse of AI’s potential influence on future generations. While the future of AI-triggered advancements continues to unfold, Dall-E offers a fascinating preview of forthcoming epochs when AI might not just augment human creativity but might very well redefine it.

Limitations and Future Developments in Dall-E

Despite these astounding capabilities of Dall-E, one must not overlook its limitations and the questions it raises about the trajectory of artificial intelligence advancements.

Dall-E’s output generation involves a degree of randomness that can be a limitation. Although it generates images that align with textual descriptions, each output is sampled from the model’s learned distribution, a fundamentally stochastic process that does not guarantee the closest interpretation of the input. As a result, the same prompt may yield different images on different runs, making results less predictable and consistent.
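The effect of stochastic sampling can be illustrated with a toy generator. This is a sketch under simplifying assumptions: the per-step scores (`STEP_LOGITS`) are invented, and a real model would recompute them at every step from the prompt and the tokens chosen so far. The `temperature` parameter is a common way to control randomness in sampling and is used here for illustration, not as a documented Dall-E setting.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Draw one token index from softmax(logits / temperature)."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)                                  # for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

def generate(logits_per_step, temperature, seed):
    """Sample a short token sequence with a seeded random generator."""
    rng = random.Random(seed)
    return [sample_token(step, temperature, rng) for step in logits_per_step]

# Hypothetical scores for three candidate tokens at each of four steps.
STEP_LOGITS = [
    [0.5, 2.0, 1.0],
    [1.5, 1.4, 0.2],
    [0.1, 0.3, 2.5],
    [1.0, 1.0, 1.0],
]

same_seed_a = generate(STEP_LOGITS, temperature=1.0, seed=0)
same_seed_b = generate(STEP_LOGITS, temperature=1.0, seed=0)
other_seed = generate(STEP_LOGITS, temperature=1.0, seed=1)
near_greedy = generate(STEP_LOGITS[:3], temperature=0.01, seed=1)

print(same_seed_a, same_seed_b)   # identical: same seed, same draws
print(other_seed)                 # a different seed will typically differ
print(near_greedy)                # very low temperature picks the top-scoring token
```

Fixing the seed makes a run repeatable, while a very low temperature pushes the sampler toward always choosing the highest-scoring option. Without either, the "prompt" stays the same but the output varies, which is the unpredictability described above.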

Moreover, Dall-E’s output is confined to a fixed resolution: 256×256 pixels for the original model and 1024×1024 pixels for its successor, Dall-E 2. The significance of this limitation becomes apparent where detailed magnification is needed; enlarged images can appear pixelated and lacking in fine detail.
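Why magnification does not help can be shown with the simplest enlargement method, nearest-neighbor upscaling. The sketch below uses a made-up 2×2 grayscale "image", and the function name is illustrative.

```python
def upscale_nearest(image, factor):
    """Enlarge a 2-D grid of pixel values by repeating each pixel `factor` times."""
    return [
        [pixel for pixel in row for _ in range(factor)]   # widen each row
        for row in image
        for _ in range(factor)                            # repeat each widened row
    ]

# A tiny 2x2 checkerboard standing in for a generated image.
tiny = [
    [0, 255],
    [255, 0],
]

big = upscale_nearest(tiny, 2)

for row in big:
    print(row)
```

The enlarged grid has four times as many pixels but exactly the same information: every original pixel has simply become a larger block. Smoother interpolation methods blur the block edges, yet they cannot recover detail the model never generated.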

Dall-E’s capabilities are also restricted by the specificity of the prompts. The model can effectively generate images for broader or figurative prompts but struggles with more literal, precisely specified ones. This presents challenges in areas requiring meticulous detail or exactitude, and there is always the risk that the model makes incorrect deductions.

Concerning its training process, Dall-E’s ability relies heavily on the variety, quality, and accuracy of the data it is trained on. As such, the accuracy, relevance, and suitability of the output hinge upon the dataset used. The lack of transparency about data compilation and curation leaves room for bias and discrepancies in the training data, which can lead to flawed outputs.

Though the two-phase learning process does contribute to Dall-E’s prowess, the human-guided inputs in the supervised fine-tuning phase are proprietary and undisclosed. This opacity raises questions about potential biases, transparency, ethical considerations, and exploitation.

Further, Dall-E’s current inability to understand context, culture, or emotion limits its application in fields that require deeper human understanding. These aspects of human judgment remain elusive for artificial intelligence.

Looking towards the future, these limitations can be seen as opportunities for improvement. Continued advancements in AI present the potential for heightened interpretive accuracy, enhanced image resolution, and refined contextual understanding.

Furthermore, the surge in this technology points to a future where AI does not merely replace or replicate human creativity but distinctly augments it, opening new domains of innovation and exploration. Continued advancements in Dall-E could see it integrated within art, creativity, design, and beyond, pushing the boundaries of what is deemed possible.

In conclusion, while Dall-E has revolutionized the intersection of natural language processing and machine vision, it nevertheless presents limitations and questions to be navigated. Advancements like Dall-E underscore the crucial need for ethical regulations, transparency, and accountability in this dynamic field of AI. They also suggest a future where the convergence of AI and human ingenuity may redefine our conceptions of creativity and innovation.

While Dall-E is truly revolutionary in bridging the gap between textual data and image generation, important ethical questions and challenges undoubtedly emerge. The risk of generating harmful content, perceived limitations in its creativity, and concerns about misuse are among the many facets that need to be addressed. Regardless, it’s clear that this technology’s potential is immense. As we continue to blaze the trail toward artificial intelligence’s future, Dall-E provides a unique glimpse into a world where language processing extends beyond words, opening up new horizons in fields as diverse as gaming, fashion design, advertising, and beyond.