Understanding Deep Learning

Deep learning is a subset of machine learning, itself a branch of AI, that trains multi-layer neural networks on large datasets to detect patterns and predict outcomes. Unlike traditional machine learning, which usually depends on features designed by hand, deep learning systems learn useful representations directly from examples, much as a child learns to ride a bike through practice rather than explicit instructions.

Neural networks, loosely inspired by how neurons in the human brain connect, are at the core of deep learning. These networks consist of layers:

- An input layer receives raw data

- Hidden layers process it

- An output layer provides the final result
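The three kinds of layers can be illustrated with a minimal sketch in plain Python. The weights, biases, layer sizes, and the choice of ReLU and sigmoid activations are assumptions picked only for illustration; a real network would learn its weights from data.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs plus a bias per unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def relu(values):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in values]

def sigmoid(value):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))

def forward(inputs):
    # Hypothetical fixed weights, chosen only for illustration.
    hidden_weights = [[0.5, -0.2], [0.3, 0.8]]
    hidden_biases = [0.0, 0.1]
    output_weights = [[1.0, -1.0]]
    output_biases = [0.0]

    hidden = relu(dense(inputs, hidden_weights, hidden_biases))  # hidden layer
    logit = dense(hidden, output_weights, output_biases)[0]      # output layer
    return sigmoid(logit)

# Input layer: two raw feature values.
prediction = forward([1.0, 2.0])
```

With these particular numbers the hidden layer produces roughly `[0.1, 2.0]`, and the final prediction is roughly 0.13, which could be read as the probability of a positive class.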

Deep learning excels at automatically discovering useful features from data, reducing much of the manual feature engineering involved in traditional machine learning.

A key distinction lies in data handling. Traditional machine learning typically needs people to structure the data and engineer features, while deep learning can learn directly from unstructured data such as images, text, and audio. The network learns by example, and its predictions generally improve over training iterations. This is achieved through backpropagation, which computes how much each weight contributed to the prediction error so that an optimizer such as gradient descent can adjust the weights to reduce it.
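To make backpropagation concrete, here is a minimal sketch that trains a two-weight network, `output = w2 * tanh(w1 * x)`, with hand-written gradients. The network shape, starting weights, learning rate, and toy data are all assumptions chosen for illustration; deep learning frameworks compute these gradients automatically.

```python
import math

def train(samples, steps=200, learning_rate=0.1):
    """Fit output = w2 * tanh(w1 * x) by gradient descent with manual backpropagation."""
    w1, w2 = 0.5, 0.5  # hypothetical starting weights
    losses = []
    for _ in range(steps):
        grad_w1 = grad_w2 = total_loss = 0.0
        for x, target in samples:
            # Forward pass: compute the prediction and its squared error.
            hidden = math.tanh(w1 * x)
            output = w2 * hidden
            error = output - target
            total_loss += error ** 2

            # Backward pass: apply the chain rule from the loss back to each weight.
            d_output = 2.0 * error
            grad_w2 += d_output * hidden
            d_hidden = d_output * w2
            grad_w1 += d_hidden * (1.0 - hidden ** 2) * x

        # Gradient descent step on the averaged gradients.
        n = len(samples)
        w1 -= learning_rate * grad_w1 / n
        w2 -= learning_rate * grad_w2 / n
        losses.append(total_loss / n)
    return w1, w2, losses

# Toy dataset (made up for this example).
samples = [(-1.0, -0.8), (0.0, 0.0), (1.0, 0.8)]
w1, w2, losses = train(samples)
```

The `losses` list records the mean squared error at each step; on this toy data it shrinks steadily, which is the "improving with each iteration" behavior described above.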

The history of deep learning is marked by significant breakthroughs. Early neural networks were simple, but new techniques let them solve increasingly complex problems. The foundations were laid by pioneers from the 1940s through the 1990s, and the field has since progressed rapidly, with developments like convolutional neural networks expanding its capabilities in processing images, text, and sound.

Deep Learning Techniques and Models

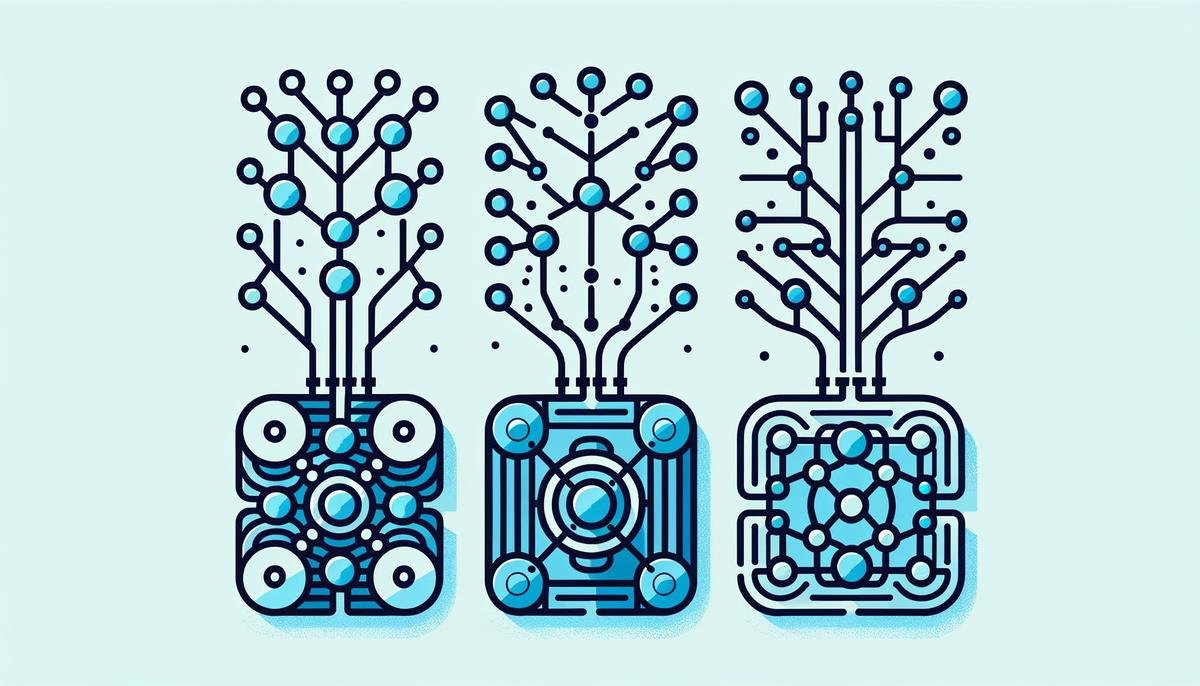

Deep learning's strength lies in its diverse models and techniques, each designed for specific data types and tasks. For image-related work, Convolutional Neural Networks (CNNs) are the standard choice. They identify patterns and features within grid-like data such as images. A typical CNN architecture combines:

- Convolutional layers to detect features

- Pooling layers to downsample data

- Fully connected layers to make final predictions
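The first two layer types can be sketched in a few lines of plain Python. The 5x5 image and the hand-written edge kernel are made up for illustration; in a trained CNN the kernel values are learned, and a fully connected layer would follow the pooled output.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers call convolution."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling that downsamples by `size` in each dimension."""
    return [
        [
            max(
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            )
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]

# Toy 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hypothetical hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, kernel)  # 4x4 map of edge responses
pooled = max_pool2d(feature_map)         # 2x2 downsampled summary
```

Every row of `feature_map` is `[0, 2, 0, 0]`, so the kernel fires only where the edge sits, and `pooled` is `[[2, 0], [2, 0]]`: a smaller grid that still records where the edge was found.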

For sequence-based tasks, Recurrent Neural Networks (RNNs) take center stage. RNNs handle data that unfolds over time, such as text or audio, by processing it one step at a time and maintaining a "memory" of previous inputs in a hidden state. This feature makes RNNs effective for language translation, speech recognition, and sentiment analysis. Gated variants such as Long Short-Term Memory (LSTM) networks mitigate a key weakness of plain RNNs: vanishing gradients, which make it hard to learn dependencies that span many time steps.
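The "memory" idea can be shown with a one-unit recurrent network in plain Python. The weights and input sequences below are hypothetical values chosen for illustration, and the update rule is the simple (non-gated) recurrent form rather than an LSTM.

```python
import math

def rnn_forward(sequence, w_input, w_hidden, bias):
    """Run a one-unit simple RNN and return the hidden state after each time step."""
    hidden = 0.0
    states = []
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        states.append(hidden)
    return states

# Two sequences that differ only in their first element.
states_a = rnn_forward([1.0, 0.0, 0.0], w_input=1.0, w_hidden=0.5, bias=0.0)
states_b = rnn_forward([0.0, 0.0, 0.0], w_input=1.0, w_hidden=0.5, bias=0.0)
```

The last input is identical in both sequences, yet the final state of `states_a` is still positive while `states_b` stays at zero: the network remembers the earlier input. The trace also fades at every step, which hints at why plain RNNs struggle with long-range dependencies.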

Generative Adversarial Networks (GANs) create entirely new data. They consist of a generator network that creates data and a discriminator network that tries to tell generated samples from real ones. This competition drives the generator to improve, ideally until the discriminator can no longer distinguish its output from the real thing. GANs have transformed fields like image enhancement and style transfer.
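The tug-of-war between the two networks shows up in their loss functions. The sketch below assumes the discriminator outputs a probability that a sample is real and uses binary cross-entropy with the commonly used non-saturating generator loss; the score values are made up, and no actual networks are trained here.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: real samples should score near 1, generated ones near 0."""
    real_term = -sum(math.log(p) for p in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - p) for p in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Non-saturating generator loss: low when generated samples score near 1."""
    return -sum(math.log(p) for p in fake_scores) / len(fake_scores)

# Hypothetical discriminator outputs early in training: fakes are easy to spot.
early_real, early_fake = [0.9, 0.8], [0.1, 0.2]

# Hypothetical outputs later on: the discriminator can only guess.
late_real, late_fake = [0.5, 0.5], [0.5, 0.5]

early_g = generator_loss(early_fake)
late_g = generator_loss(late_fake)
early_d = discriminator_loss(early_real, early_fake)
late_d = discriminator_loss(late_real, late_fake)
```

As the generator improves, its loss falls (`late_g < early_g`) while the discriminator's loss rises (`late_d > early_d`), reflecting the opposing objectives described above.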

These models and techniques are often combined. Transfer learning allows a pre-trained model to adapt to a different but related task with minimal adjustment. Ensemble techniques combine the predictions of several models, often improving accuracy over any single one.
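One simple ensemble technique is soft voting, where the class probabilities from several models are averaged. The sketch below assumes each model has already produced a probability per class; the three probability lists are hypothetical.

```python
def soft_vote(probabilities_per_model):
    """Average class probabilities across models; return (winning class, averages)."""
    n_models = len(probabilities_per_model)
    n_classes = len(probabilities_per_model[0])
    averaged = [
        sum(model[c] for model in probabilities_per_model) / n_models
        for c in range(n_classes)
    ]
    best_class = max(range(n_classes), key=lambda c: averaged[c])
    return best_class, averaged

# Hypothetical outputs of three models for one input (classes 0 and 1).
model_outputs = [
    [0.6, 0.4],  # model A leans toward class 0
    [0.3, 0.7],  # model B leans toward class 1
    [0.2, 0.8],  # model C leans toward class 1
]
best_class, averaged = soft_vote(model_outputs)
```

Here the ensemble picks class 1 even though one model disagrees, because the averaged probability for class 1 is higher.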

Applications of Deep Learning

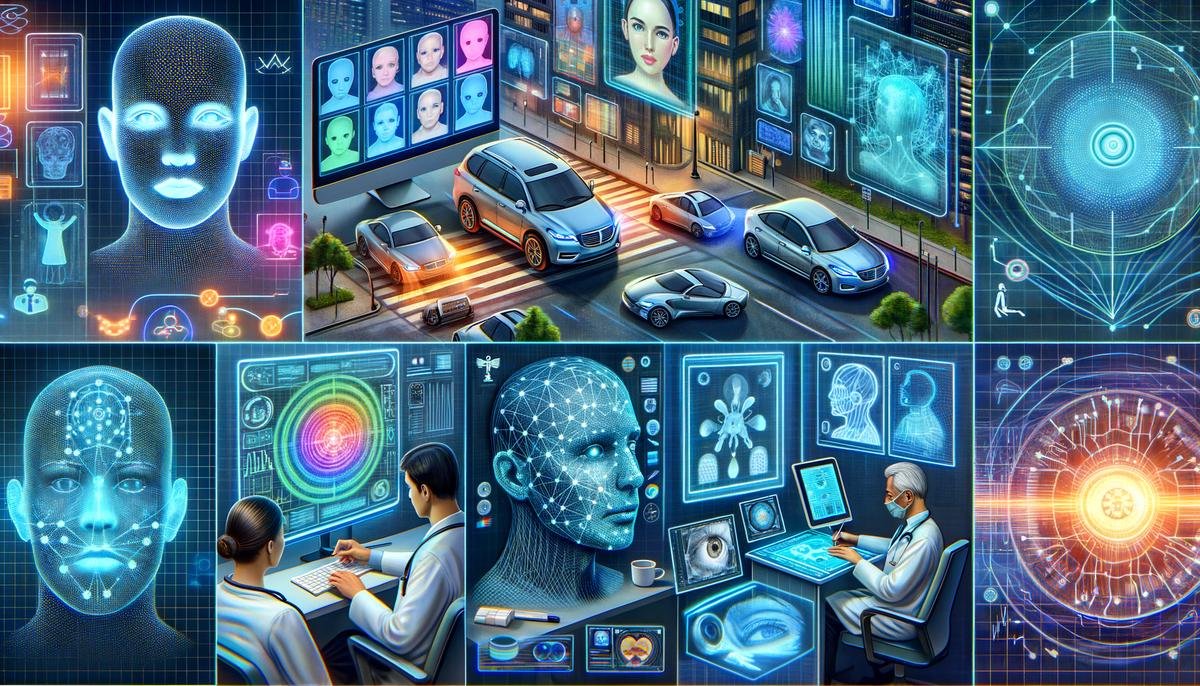

Deep learning is transforming numerous industries. In image recognition, it excels at classifying and identifying objects, faces, and scenes with high precision. This capability is vital for security systems, photo management apps, and more.

In natural language processing (NLP), deep learning enables more intuitive interactions between humans and machines. Chatbots and virtual assistants become more contextually aware and accurate, improving communication and allowing seamless voice-driven experiences.

The automotive industry uses deep learning in autonomous vehicles. Self-driving cars process data from sensors such as cameras, radar, and lidar to interpret their surroundings and make driving decisions. This technology aims to improve vehicle safety and could help reduce traffic congestion and increase fuel efficiency.

In healthcare, deep learning improves disease detection accuracy, from identifying tumors in radiology scans to predicting genetic disorders. By processing vast datasets from medical records, it aids in early diagnosis and improves personalized treatment plans.

"By automating complex tasks previously requiring human intelligence, deep learning expands technology's reach and capabilities across various fields."

Challenges and Limitations

Deep learning faces several challenges and limitations. A primary hurdle is its need for extensive data, which can be difficult and time-consuming to obtain and process, especially in areas with data privacy concerns. The volume of data required means organizations must invest in substantial storage and infrastructure capabilities.

Another significant challenge is the computational cost. Training complex models often requires immense computational power, necessitating high-end hardware like GPUs or TPUs. This can lead to higher operational expenses, which may be prohibitive for smaller organizations or research labs with limited budgets.

Interpretability is also a notable issue. Deep learning models often function as "black boxes," making it difficult to understand their decision-making processes. This lack of transparency can be problematic in sectors requiring accountability, such as finance or healthcare.

Strategies are emerging to address these challenges:

- Transfer learning can reduce data requirements by repurposing pre-trained models for new tasks

- Optimizing algorithms and developing more efficient architectures helps lower computational demands

- Cloud computing offers more scalable and cost-effective ways to access necessary computational resources
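The first strategy, transfer learning, can be sketched as a frozen feature extractor with a small trainable "head" on top. Everything here is a toy stand-in: `pretrained_features` is a hypothetical placeholder for a real pre-trained network, and the data, learning rate, and step count are assumptions.

```python
def pretrained_features(x):
    """Hypothetical stand-in for a frozen, pre-trained feature extractor."""
    return [x, x * x]

def fit_head(samples, steps=500, learning_rate=0.05):
    """Train only a linear head on top of the frozen features."""
    weights = [0.0, 0.0]
    for _ in range(steps):
        grads = [0.0, 0.0]
        for x, target in samples:
            feats = pretrained_features(x)  # the extractor itself is never updated
            error = sum(w * f for w, f in zip(weights, feats)) - target
            for k, f in enumerate(feats):
                grads[k] += 2.0 * error * f / len(samples)
        weights = [w - learning_rate * g for w, g in zip(weights, grads)]
    return weights

# Small made-up dataset for the new task, where target = 2 * x**2.
samples = [(-1.0, 2.0), (-0.5, 0.5), (0.5, 0.5), (1.0, 2.0)]
head_weights = fit_head(samples)
```

Because the features already capture what the new task needs, only two weights have to be learned from four examples, which is the sense in which transfer learning reduces data requirements.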

To improve interpretability, researchers are exploring methods to clarify deep learning models' decision-making processes, enhancing transparency and trust in AI systems.
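One family of such methods perturbs each input slightly and measures how much the output changes. The sketch below is a minimal finite-difference version; `toy_model` is a hypothetical stand-in for an opaque model, and practical tools use more robust attribution techniques.

```python
def feature_sensitivity(model, inputs, delta=1e-4):
    """Estimate how much the output changes when each input feature is nudged."""
    baseline = model(inputs)
    scores = []
    for i in range(len(inputs)):
        nudged = list(inputs)
        nudged[i] += delta
        scores.append((model(nudged) - baseline) / delta)
    return scores

def toy_model(inputs):
    # Hypothetical "black box": the caller is assumed not to know these coefficients.
    return 3.0 * inputs[0] - 0.5 * inputs[1] + 0.0 * inputs[2]

scores = feature_sensitivity(toy_model, [1.0, 2.0, 3.0])
```

The scores come out close to `[3.0, -0.5, 0.0]`, indicating that the first feature drives the prediction most strongly and the third has no effect.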

Future Directions in Deep Learning

Several emerging technologies could extend what deep learning can do. Quantum computing, though still at an early stage, could change how we approach complex problems, potentially enabling models to process vast datasets with far greater speed and efficiency.

The integration of deep learning with the Internet of Things (IoT) is another significant trend. Running models directly on connected devices could transform industries such as agriculture and retail, where smart applications can process data in real time at the edge.

Deep learning is making progress in areas like climate modeling and materials science. These models could play a crucial role in predicting climate change impacts and discovering new materials with desired properties.

Research on explainable AI, focusing on transparency and interpretability, is becoming more prevalent. This area is crucial in sectors where understanding decision processes is vital.

AutoML, or automated machine learning, is gaining traction, streamlining the creation and tuning of complex models without extensive expertise. This could lead to wider adoption of deep learning techniques across various fields.
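A small piece of what AutoML tools automate is the hyperparameter search. The sketch below uses plain random search; `toy_validation_loss` is a hypothetical stand-in for training a model and measuring its validation loss, and the parameter names and ranges are assumptions. Real AutoML systems typically use more sophisticated strategies and also search over architectures.

```python
import random

def random_search(objective, search_space, trials=50, seed=0):
    """Sample configurations uniformly at random and keep the one with the lowest score."""
    rng = random.Random(seed)
    best_config, best_score = None, float("inf")
    for _ in range(trials):
        config = {
            name: rng.uniform(low, high)
            for name, (low, high) in search_space.items()
        }
        score = objective(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score

def toy_validation_loss(config):
    # Hypothetical stand-in for "train a model with this config and evaluate it".
    return (config["learning_rate"] - 0.1) ** 2 + (config["dropout"] - 0.3) ** 2

search_space = {"learning_rate": (0.0, 1.0), "dropout": (0.0, 0.9)}
best_config, best_score = random_search(toy_validation_loss, search_space)
```

The search returns the best configuration it sampled along with its score, with no manual tuning by the user.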

Deep learning's application in personalized education is growing, with the potential to create customized learning experiences that adapt to individual student needs.

Ongoing research is exploring the fusion of deep learning with reinforcement learning, which could yield more dynamic AI applications capable of improving their performance with each interaction.

Deep learning continues to transform industries and redefine what is possible. As the underlying technologies mature, they are likely to further reshape human-machine interaction worldwide, underscoring deep learning's impact on modern life.
