Revolutionizing Image Generation with DALL-E 3

The digital age has ushered in a myriad of advancements, with Artificial Intelligence (AI) standing at the forefront of this technological revolution. From self-driving cars to virtual personal assistants, AI has permeated virtually every aspect of our lives. One of its most intriguing applications lies in the realm of image generation. Imagine a world where machines don’t just interpret images but create them, conjuring visuals from mere textual descriptions. This isn’t the stuff of science fiction anymore but a reality made possible by models like OpenAI’s DALL-E.

In its quest for perfection, the AI community continually seeks to refine and enhance existing models. Recent OpenAI research highlights one factor that can significantly affect the performance of image generation models: the quality of the captions used during training. This finding shaped the development of DALL-E 3, a new version designed to produce more accurate and detailed images.

Background: DALL-E’s Legacy

To truly appreciate the significance of DALL-E 3, one must first understand its predecessors. The original DALL-E, a neural network-based model developed by OpenAI, was groundbreaking in its ability to generate unique, often whimsical images from textual prompts. It could take a phrase as abstract as “a cubist painting of a pineapple” and render it as an image. Its applications ranged widely, from generating art to assisting with design and visualization tasks across many industries.

Yet, as with all pioneering technologies, DALL-E had its limitations. While it excelled in many scenarios, it sometimes faltered when given intricate or highly detailed descriptions. This inconsistency between user expectations and generated outputs presented a challenge that researchers were eager to address.

The Challenge with Current Models

Generative AI models, despite their potential, are only as good as the data they’re trained on. Traditional text-to-image models have demonstrated commendable capabilities, creating visuals from textual descriptions that would have seemed impossible just a few years ago. However, they often stumbled when descriptions grew intricate.

Consider an example where a user prompts the model with “a serene landscape with a babbling brook, cherry blossom trees in full bloom, and a setting sun casting a golden hue over everything.” While one would envision a picturesque scene, the model might omit several elements, perhaps leaving out the cherry blossoms or the golden light of the setting sun.

Researchers traced the root cause of such discrepancies to the training data, specifically the captions paired with the training images. The quality, accuracy, and descriptiveness of these captions largely determine how faithfully a model can turn a prompt into an image.

The Power of Improved Captions

Recognizing the pivotal role of captions, OpenAI’s research team set out to refine the training data itself. Their hypothesis was straightforward: better captions would significantly improve the model’s performance.

To test this, the team trained a dedicated image captioner to produce more accurate and detailed descriptions of images, then used it to recaption the training images. Models trained on these enriched captions showed a marked improvement, generating images that were not only more accurate but also more contextually relevant and aesthetically pleasing.
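The recaptioning step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not OpenAI’s pipeline: `Example`, `recaption_dataset`, and the stand-in captioner are hypothetical, the captioner takes an image identifier rather than pixels to keep the sketch self-contained, and the `synthetic_ratio` parameter (the share of captions replaced) with its 0.95 default is an assumed value chosen for illustration.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Example:
    """One training pair: an image reference and its caption (hypothetical schema)."""
    image_id: str
    caption: str


def recaption_dataset(
    dataset: List[Example],
    captioner: Callable[[str], str],
    synthetic_ratio: float = 0.95,  # assumed blend ratio, for illustration only
    seed: int = 0,
) -> List[Example]:
    """Return a new dataset in which each caption is replaced, with probability
    `synthetic_ratio`, by a descriptive caption from `captioner`; the remaining
    examples keep their original captions."""
    if not 0.0 <= synthetic_ratio <= 1.0:
        raise ValueError("synthetic_ratio must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the blend is reproducible
    blended = []
    for ex in dataset:
        if rng.random() < synthetic_ratio:
            # Swap in the captioner's richer description of the same image.
            blended.append(Example(ex.image_id, captioner(ex.image_id)))
        else:
            # Keep the original (often short or noisy) caption.
            blended.append(ex)
    return blended


if __name__ == "__main__":
    # Hypothetical stand-in captioner; a real one would be an image-to-text model.
    detailed = {
        "img1": "a tabby cat asleep on a red armchair by a sunlit window",
        "img2": "a golden retriever catching a blue frisbee on a beach",
    }
    raw = [Example("img1", "cat"), Example("img2", "dog pic")]
    for ex in recaption_dataset(raw, lambda image_id: detailed[image_id]):
        print(ex.image_id, "->", ex.caption)
```

Keeping `synthetic_ratio` below 1.0 leaves some original captions in the data, which is one plausible way to keep a model comfortable with the short, informal prompts real users often write.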

Unveiling the Phenomenon: DALL-E 3

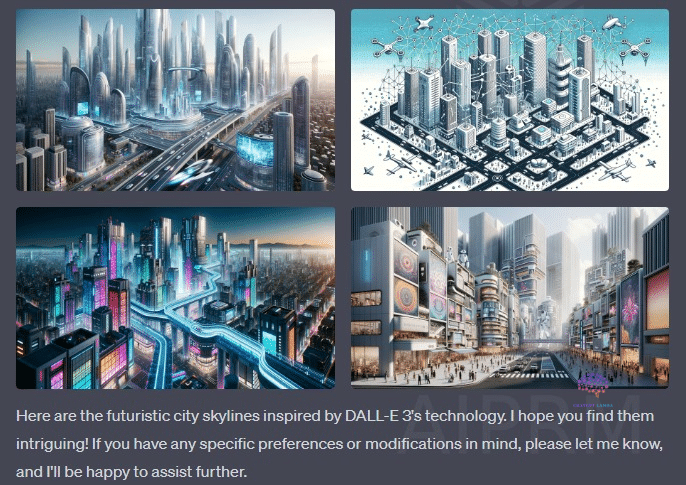

Building on this research, OpenAI released DALL-E 3. Trained on the refined captions, the model set a new bar for image generation. It handles intricate prompts far more reliably than earlier models, producing images that are coherent, contextually relevant, and visually appealing.

Comparative evaluations showed DALL-E 3 outperforming competing models as well as its predecessor. Whether following elaborate prompts, maintaining visual coherence, or simply producing captivating images, DALL-E 3 excelled across the board. With this advance, the bridge between human imagination and AI-generated visuals has become sturdier and more nuanced.

The odyssey of AI, especially in image generation, mirrors humanity’s unyielding spirit and ceaseless pursuit of excellence. From rudimentary digital sketches to today’s sophisticated visuals, the progress has been monumental. DALL-E 3 epitomizes this journey, seamlessly fusing textual narratives with digital creation. As we stand on the brink of this new era, we’re left in awe and anticipation: what marvels await us next? If DALL-E’s story is any indicator, the horizon holds wonders beyond our wildest dreams.