What Are Neural Networks?

A neural network is a computational model inspired by the structure of the human brain. It consists of interconnected nodes, called neurons, arranged in layers:

- Input Layer: Receives initial data.

- Hidden Layers: Process information and extract patterns. Each neuron computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function to produce its output.

- Output Layer: Provides the final result or prediction.
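
To make the layer structure concrete, here is a minimal sketch in plain Python of a forward pass through a tiny network with 2 inputs, 3 hidden neurons, and 1 output. The weights and biases are hand-picked for illustration, not taken from a trained model. Using ReLU in the hidden layer and sigmoid at the output is also an assumption, and `dense` is a hypothetical helper name.

```python
import math

def relu(z: float) -> float:
    """ReLU activation: passes positive values through, zeroes out negatives."""
    return max(0.0, z)

def sigmoid(z: float) -> float:
    """Sigmoid activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer (hypothetical helper).

    weights[j] holds the incoming weights of neuron j; each neuron computes
    activation(sum_i weights[j][i] * inputs[i] + biases[j]).
    """
    return [
        activation(sum(w * x for w, x in zip(w_row, inputs)) + b)
        for w_row, b in zip(weights, biases)
    ]

# Hand-picked illustrative parameters for a 2-input, 3-hidden, 1-output network.
W_hidden = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
b_hidden = [0.0, 0.1, 0.2]
W_out = [[1.0, -1.0, 0.5]]
b_out = [0.0]

x = [1.0, 2.0]                              # input layer: raw features
h = dense(x, W_hidden, b_hidden, relu)      # hidden layer: weighted sums + ReLU
y = dense(h, W_out, b_out, sigmoid)         # output layer: a value in (0, 1)
print(h, y)
```

The third hidden neuron's weighted sum is negative, so ReLU outputs 0 for it. This is a small example of how activation functions shape what each layer passes on.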

Neural networks learn by adjusting the weights of the connections between neurons according to how far their predictions are from the correct answers. This typically relies on backpropagation, which computes how much each weight contributed to the error so that the weights can be updated to reduce it. Through training, neural networks can learn to recognize patterns, make predictions, and perform complex decision-making tasks.

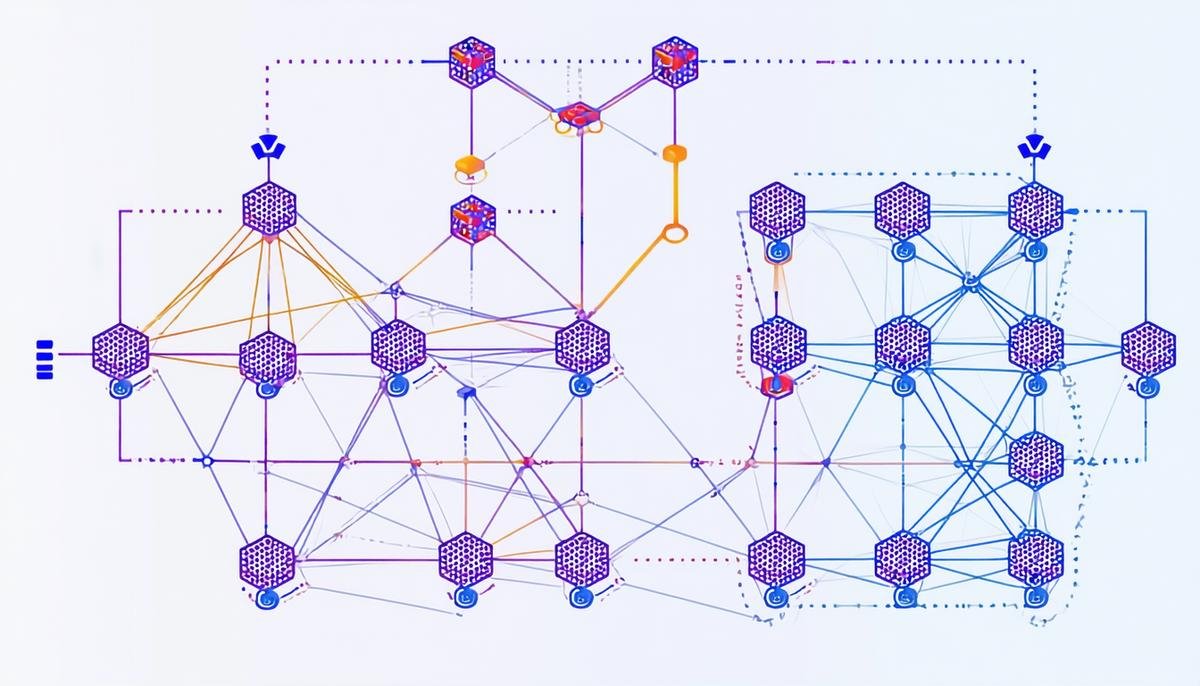

Types of Neural Networks

Various types of neural networks are designed for specific tasks:

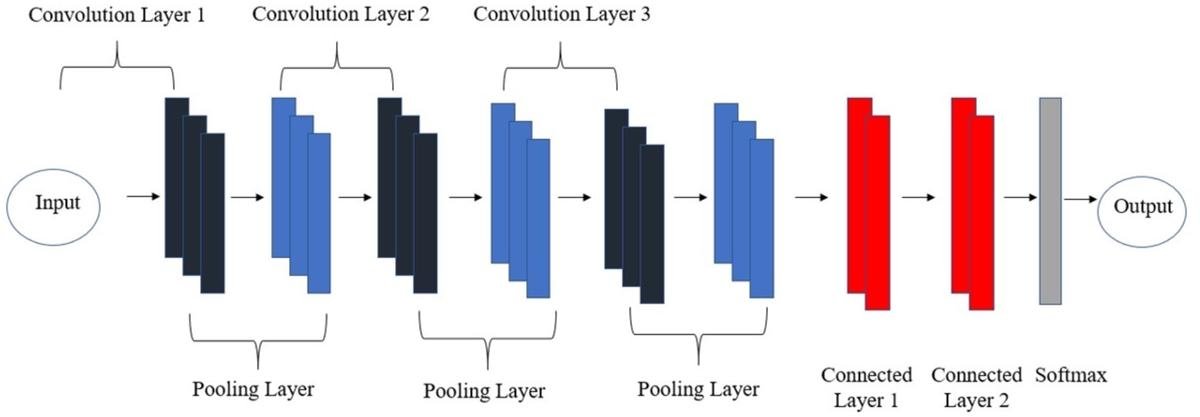

- Feedforward Neural Networks (FNNs): The simplest type, in which data flows in one direction, from input to output, with no loops. Suitable for static input-output mapping tasks such as simple image classification.

- Convolutional Neural Networks (CNNs): Excel in image processing and computer vision. They slide small learnable filters across an image to detect local patterns such as edges and textures, then combine these into higher-level features.

- Recurrent Neural Networks (RNNs): Designed for sequential data tasks, such as language modeling and time-series prediction. They maintain a hidden state that carries information from earlier inputs, which makes them effective for context-dependent tasks.

- Generative Adversarial Networks (GANs): Consist of two networks trained against each other: a generator that produces synthetic data and a discriminator that tries to tell it apart from real data. They are used to create realistic images, music, and game design elements.
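
As an illustration of how a CNN picks out local patterns, the sketch below slides a small 2x2 filter over a 4x4 image and records a product-sum at each position. The image, the filter values, and the `conv2d` helper are hypothetical. In a real CNN, the filter weights are learned during training, and many filters run in parallel.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution: slide the kernel over every position where it
    fits entirely inside the image and take the elementwise product-sum.
    Strictly, this is cross-correlation (no kernel flip), which is what most
    deep-learning libraries implement under the name convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 4x4 "image": dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge filter; a trained CNN would learn such values.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)   # each row is [0, 2, 0]: the filter fires only at the edge
```

The resulting feature map is non-zero only in the middle column, where the dark-to-bright edge sits. Later layers can then combine many such maps into higher-level features.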

Each type of neural network offers unique advantages for particular use cases, contributing to various fields of artificial intelligence.
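
The "memory" of an RNN can be sketched with a single scalar hidden state that is updated at every time step. This is a minimal illustration with hand-picked weights (`w_x`, `w_h`, `b`, all assumptions) and a tanh activation. Practical RNNs use vector-valued hidden states and learned weight matrices.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One step of a minimal scalar RNN: the new hidden state combines the
    current input with the previous hidden state, then applies tanh."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Process a sequence step by step, reusing the same weights at every step."""
    h = 0.0                  # initial hidden state: no history yet
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# Both sequences end with the same input (0.0) but have different histories.
print(run_rnn([1.0, 0.0]))
print(run_rnn([-1.0, 0.0]))
```

Both runs end with the same input, yet their final hidden states have opposite signs. Each state still carries information from the earlier input, which is what lets RNNs handle context-dependent tasks.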

How Neural Networks Learn

Neural networks learn through a process involving:

- Forward propagation: Input data passes through the network, transformed by weights, biases, and activation functions.

- Loss calculation: The difference between predicted and actual output is measured using a loss function.

- Backpropagation: The error is propagated backward through the network using the chain rule, yielding the gradient of the loss with respect to each weight and bias.

- Weight updates: An optimization algorithm, such as gradient descent, adjusts each weight in the direction that reduces the loss.
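
The four steps above can be sketched with the smallest possible model, a single linear neuron y_hat = w * x + b, trained with mean squared error L = (1/n) * sum of (y_hat_i - y_i)^2. Applying the chain rule gives dL/dw = (2/n) * sum of (y_hat_i - y_i) * x_i and dL/db = (2/n) * sum of (y_hat_i - y_i). In a deeper network, backpropagation applies the same chain rule layer by layer. The toy data, learning rate, and epoch count are illustrative assumptions, and plain gradient descent is used as the optimizer.

```python
def train_linear_neuron(xs, ys, lr=0.05, epochs=2000):
    """Fit y_hat = w * x + b by plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    loss = float("inf")
    for _ in range(epochs):
        # 1. Forward propagation: predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # 2. Loss calculation: mean squared error against the targets.
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        # 3. Backpropagation: chain rule gives dL/dw and dL/db.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # 4. Weight update: step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    # `loss` is the value measured in the last epoch, before its final update.
    return w, b, loss

# Toy, noise-free data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b, loss = train_linear_neuron(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss={loss:.6f}")
```

Starting from w = 0 and b = 0, repeated forward passes and gradient steps move the parameters toward the values that generated the data, w = 2 and b = 1.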

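Activation functions introduce non-linearity, which lets the network learn complex patterns; without them, any stack of layers would reduce to a single linear transformation. Repeating the cycle of forward propagation, loss calculation, backpropagation, and weight updates over many iterations gradually reduces the loss, so the network's predictions typically become more accurate.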
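
The role of non-linearity can be checked directly. Two linear layers applied in sequence, W2(W1 x), give exactly the same result as one layer whose matrix is the product W2W1, so depth alone adds nothing. Inserting ReLU between them breaks this equivalence. The matrices and the input vector are arbitrary illustrative values, and biases are omitted for brevity.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def apply(W, x):
    """Apply weight matrix W to a vector x (a flat list)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu_vec(v):
    """Elementwise ReLU."""
    return [max(0.0, z) for z in v]

W1 = [[1.0, -2.0], [3.0, 0.5]]   # first layer (biases omitted)
W2 = [[2.0, 1.0], [-1.0, 4.0]]   # second layer
x = [1.0, 1.0]

two_linear_layers = apply(W2, apply(W1, x))
one_merged_layer = apply(matmul(W2, W1), x)       # a single equivalent matrix
with_relu = apply(W2, relu_vec(apply(W1, x)))     # non-linearity in between

print(two_linear_layers)   # [1.5, 15.0]
print(one_merged_layer)    # [1.5, 15.0]: identical, the layers collapsed
print(with_relu)           # [3.5, 14.0]: no single matrix reproduces this in general
```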

Applications of Neural Networks

Neural networks are applied across various industries:

- Medical field: Analyze medical images for disease detection and diagnosis.

- Autonomous vehicles: Process sensor data to navigate and make decisions in real-time.

- Speech recognition: Power virtual assistants and transcription services, adapting to diverse speech patterns.

- Finance: Forecast market trends and assess credit risk.

- Entertainment: Curate personalized content recommendations.

- Retail: Forecast demand and optimize stock levels.

- Manufacturing: Perform predictive maintenance to minimize equipment downtime.

These applications show how neural networks are improving efficiency and expanding what is possible across sectors, from faster diagnosis to more reliable equipment and more relevant recommendations.

Challenges and Future Directions

Neural networks face several challenges:

- High computational resources: Training requires significant processing power and energy.

- Interpretability: The "black box" problem makes it difficult to understand how networks reach their conclusions.

Ongoing research aims to address these issues:

- Developing more efficient training algorithms, along with techniques such as pruning and quantization that shrink models, and neural architecture search, which automates the design of efficient architectures.

- Exploring explainable AI (XAI) methods to increase transparency in decision-making processes.
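
Two of the efficiency techniques mentioned above, pruning and quantization, can be sketched in a few lines. The variants below are simple textbook forms chosen for illustration: magnitude pruning, and symmetric 8-bit quantization with a single scale factor. The weight values are hypothetical. Production toolkits typically add refinements, such as per-channel scales and retraining after pruning.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitudes."""
    k = int(len(weights) * sparsity)                 # how many weights to remove
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights):
    """Symmetric linear quantization to integers in [-127, 127] with one scale."""
    max_abs = max(abs(w) for w in weights) or 1.0    # avoid dividing by zero
    scale = max_abs / 127.0
    q = [round(w / scale) for w in weights]          # small integers to store
    return q, scale

def dequantize(q, scale):
    """Map stored integers back to approximate float weights."""
    return [qi * scale for qi in q]

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]       # hypothetical layer weights
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(pruned)   # the three smallest-magnitude weights are set to 0.0
print(q)        # each weight stored as an 8-bit-range integer
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most scale / 2
```

Pruning reduces how many weights must be stored and computed, while quantization reduces how many bits each weight needs. Both trade a small amount of accuracy for lower memory use and compute cost.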

As these challenges are addressed, neural networks are likely to become more accessible and more widely integrated into everyday life. They should continue to serve as powerful tools for automation, prediction, and data analysis, helping to tackle complex problems and derive meaningful insights from data across many fields.
